Secure-by-Design Agentic Systems: Practical Patterns by Mark Hewitt

Agentic AI is moving quickly from novelty to enterprise ambition. Organizations want agents that can interpret intent, execute workflows, coordinate across tools, and take action across systems. This promise is compelling because it introduces real operational leverage. It also introduces a new class of enterprise security exposure. Agents are not passive assistants. They are active actors. They can retrieve information, invoke tools, manipulate data, and initiate actions. In many cases, they operate with high-frequency access to sensitive systems and business processes.

This means agentic AI must be secured differently than traditional software. It must be treated like privileged infrastructure. The security posture of agentic systems will determine whether they can scale beyond low-impact use cases. Enterprises that treat agentic AI as a feature will encounter governance failures, incidents, and risk escalation. Enterprises that treat it as a secure-by-design capability will scale with confidence.

Why Agentic AI Expands the Attack Surface

Traditional applications generally expose a limited interface and a defined set of functions. Agentic systems are different. An agent expands the attack surface in four ways.

Tool access. Agents often connect to multiple tools and APIs. Each tool becomes part of the threat boundary.

Data access. Agents may query sensitive data and synthesize it into outputs. They can inadvertently expose restricted information.

Action capability. Agents can take action across systems, sometimes chaining actions together. This increases potential blast radius.

Natural language interface risk. Agents are often driven by language instructions, which introduces new exploitation methods such as prompt injection, tool manipulation, and retrieval poisoning.

Executives should view an agent as a privileged actor that needs identity, permissions, monitoring, and constrained authority.

Secure-by-Design Means Built-In Controls, Not Policies

Many enterprises approach AI security through policy and review. Those matter, but they do not secure runtime behavior. Secure-by-design means security is embedded into the architecture and operating model. It is enforced by technical controls at runtime, not dependent on people or agents following policy. In agentic systems, secure-by-design has three goals.

Limit what the agent can access

Limit what the agent can do

Detect and stop unsafe behavior quickly

This is how security becomes scalable.

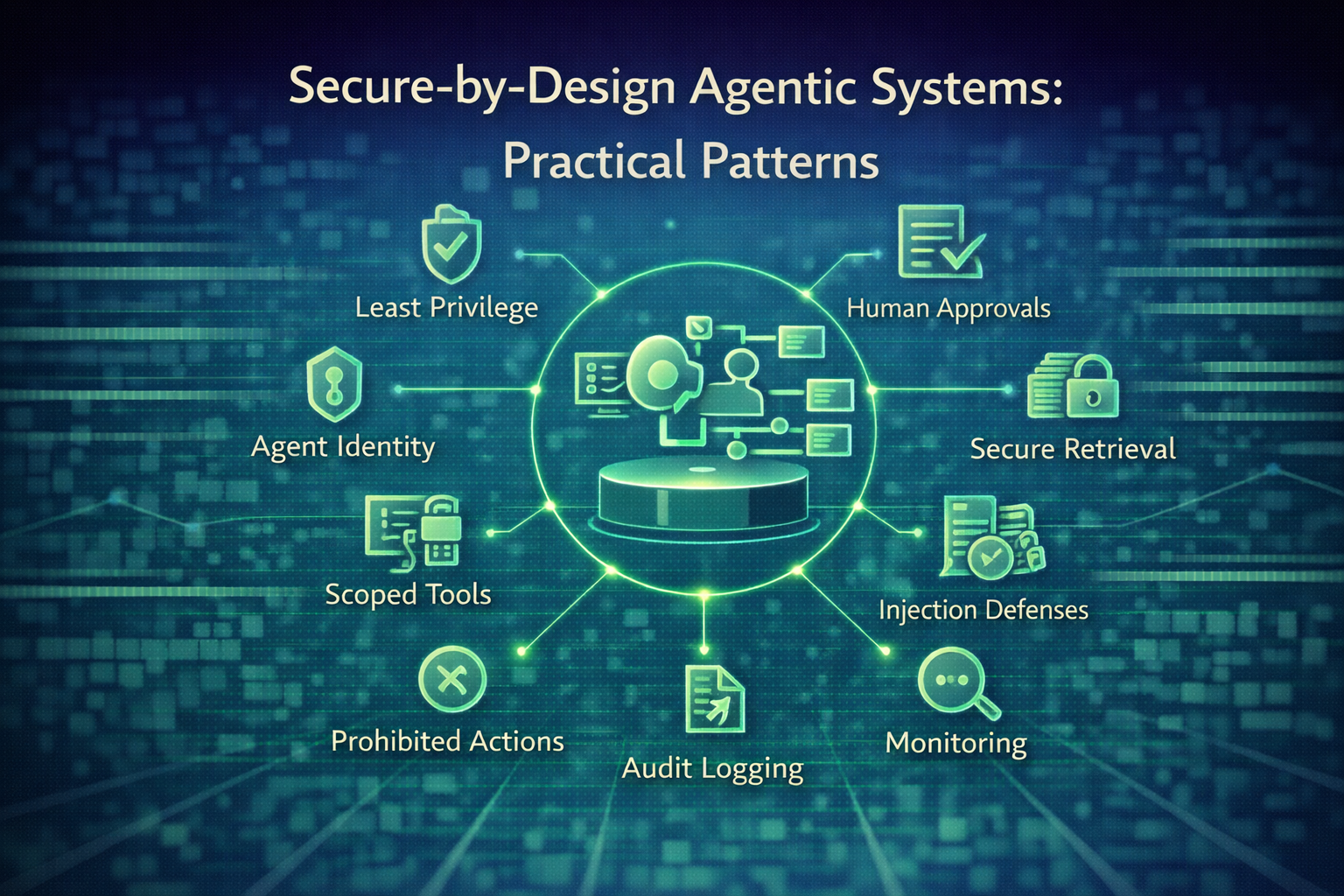

Practical Security Patterns for Agentic Systems

Below are secure-by-design patterns that enterprises can apply immediately. Each is practical and aligns to real enterprise deployment conditions.

1. Least privilege, always

Agents should not have broad access. Permissions must be scoped to the workflow and the role. If an agent supports HR workflows, it should not access finance systems. If it supports compliance evidence collection, it should not write to production databases. Least privilege should apply to:

tools

APIs

data sources

environments

actions

This reduces blast radius and limits exposure.
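A deny-by-default check is one way to express this. The sketch below assumes a hypothetical `AgentPolicy` that lists, for each of the five scopes above, exactly which resources one agent may use. Anything not granted is refused. All agent and resource names are illustrative.

```python
from dataclasses import dataclass, field

# The five scopes listed above; any other scope is refused outright.
SCOPES = {"tools", "apis", "data_sources", "environments", "actions"}


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-agent grant list: scope -> set of permitted resources."""
    agent_id: str
    grants: dict = field(default_factory=dict)

    def permits(self, scope: str, resource: str) -> bool:
        # Deny by default: unknown scopes and ungranted resources are refused.
        if scope not in SCOPES:
            return False
        return resource in self.grants.get(scope, set())


# An agent scoped to HR workflows only (all names are illustrative).
hr_agent = AgentPolicy(
    agent_id="hr-onboarding-agent",
    grants={
        "tools": {"hris_lookup", "ticket_create"},
        "data_sources": {"hr_employee_records"},
        "environments": {"staging"},
        "actions": {"read", "create_ticket"},
    },
)

print(hr_agent.permits("data_sources", "hr_employee_records"))  # True
print(hr_agent.permits("data_sources", "finance_ledger"))       # False
print(hr_agent.permits("apis", "payments_api"))                 # False: no API grants at all
```

Because nothing is permitted unless it is granted, extending an agent into a new system requires an explicit, reviewable change to its grants rather than happening as a side effect of a broad credential.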

2. Identity and authentication for agents

Agents should have an identity that is separate from human users. Their identity should be managed like a service account with clear ownership and governance. Every agent action should be attributable. No shared accounts. No ambiguous credentials. No generic keys. This is essential for auditability and incident response.
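As a minimal sketch of attributable agent identity, the hypothetical `AgentRegistry` below registers each agent as its own principal with a named human owner and a distinct credential handle. It refuses both duplicate identities and actions from unregistered ones. In a real deployment the identity would live in the enterprise identity provider and the credential would come from a secrets manager; `secrets.token_hex` only stands in for that here.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str       # unique; never reused or shared between agents
    owner: str          # accountable human owner
    credential_id: str  # handle for this agent's own credential


class AgentRegistry:
    """Hypothetical registry: one identity, one owner, one credential per agent."""

    def __init__(self) -> None:
        self._by_id: dict[str, AgentIdentity] = {}

    def register(self, agent_id: str, owner: str) -> AgentIdentity:
        if not owner:
            raise ValueError("every agent needs an accountable owner")
        if agent_id in self._by_id:
            raise ValueError(f"agent id {agent_id!r} is already in use")
        # Stand-in for a credential issued by an identity provider or secrets manager.
        identity = AgentIdentity(agent_id, owner, "cred-" + secrets.token_hex(8))
        self._by_id[agent_id] = identity
        return identity

    def attribute(self, agent_id: str, action: str) -> str:
        # Actions from unregistered identities are refused, not logged as "unknown".
        identity = self._by_id.get(agent_id)
        if identity is None:
            raise PermissionError(f"unregistered agent {agent_id!r}")
        return f"{action} by {identity.agent_id} (owner: {identity.owner})"


registry = AgentRegistry()
registry.register("compliance-evidence-agent", owner="j.doe")
print(registry.attribute("compliance-evidence-agent", "collect_evidence"))
# collect_evidence by compliance-evidence-agent (owner: j.doe)
```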

3. Scoped tool access and allowlisting

Agents should be restricted to approved tools and APIs. Tool access should be allowlisted and workflow-specific. Executives should require:

tool allowlists

parameter constraints

restricted actions per tool

rate limiting

controlled execution environments

This prevents an agent from invoking unintended tools or abusing access paths.
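The controls above can be combined into one gate that every tool call must pass before it runs. This is a sketch under stated assumptions: `ToolSpec` and `ToolGate` are hypothetical names, parameter constraints are simple per-argument predicates, and rate limiting uses a 60-second sliding window. Controlled execution environments are outside the scope of the snippet.

```python
import time
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolSpec:
    """Hypothetical allowlist entry for one tool."""
    allowed_operations: set[str]
    # Per-parameter validators; each returns True if the value is acceptable.
    param_constraints: dict[str, Callable[[object], bool]]
    max_calls_per_minute: int
    recent_calls: deque = field(default_factory=deque)


class ToolGate:
    """Checks every tool call against the allowlist before it executes."""

    def __init__(self, allowlist: dict[str, ToolSpec],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.allowlist = allowlist
        self.clock = clock

    def check(self, tool: str, operation: str, params: dict) -> None:
        spec = self.allowlist.get(tool)
        if spec is None:
            raise PermissionError(f"tool {tool!r} is not on the allowlist")
        if operation not in spec.allowed_operations:
            raise PermissionError(f"operation {operation!r} is not permitted on {tool!r}")
        # Unknown parameters are rejected along with out-of-range values.
        for name, value in params.items():
            validator = spec.param_constraints.get(name)
            if validator is None or not validator(value):
                raise ValueError(f"parameter {name!r}={value!r} violates constraints")
        # Sliding 60-second window for rate limiting.
        now = self.clock()
        while spec.recent_calls and now - spec.recent_calls[0] >= 60:
            spec.recent_calls.popleft()
        if len(spec.recent_calls) >= spec.max_calls_per_minute:
            raise PermissionError(f"rate limit exceeded for {tool!r}")
        spec.recent_calls.append(now)


gate = ToolGate({
    "ticket_system": ToolSpec(
        allowed_operations={"read", "create"},
        param_constraints={
            "queue": lambda v: v in ("hr", "it"),
            "limit": lambda v: isinstance(v, int) and 1 <= v <= 50,
        },
        max_calls_per_minute=30,
    ),
})
gate.check("ticket_system", "read", {"queue": "hr", "limit": 10})  # allowed
# gate.check("ticket_system", "delete", {}) would raise PermissionError
```

Rejecting unknown parameters, not just bad values, matters here: an agent that can pass arbitrary extra arguments can often reach behavior the allowlist was meant to exclude.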

4. Guardrails for prohibited actions

Define prohibited actions explicitly and enforce them automatically. Examples include:

exporting sensitive data

changing production configurations

modifying access controls

triggering financial transactions

publishing customer-facing content

deleting records

The system should prevent prohibited actions even when the agent attempts to perform them.

This moves security from intent to enforcement.
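Enforcement means the check sits outside the agent, in code the agent cannot influence. In the minimal sketch below, a platform-owned table (hypothetical, with illustrative tool names) classifies each tool operation into a category. The six prohibited categories mirror the list above, and operations that have not been classified fail closed. The agent never supplies the category itself, so it cannot relabel a prohibited action as a safe one.

```python
from enum import Enum
from typing import Callable


class Category(Enum):
    READ = "read"
    DRAFT = "draft"
    EXPORT_SENSITIVE_DATA = "export_sensitive_data"
    CHANGE_PRODUCTION_CONFIG = "change_production_config"
    MODIFY_ACCESS_CONTROLS = "modify_access_controls"
    FINANCIAL_TRANSACTION = "financial_transaction"
    PUBLISH_CUSTOMER_CONTENT = "publish_customer_content"
    DELETE_RECORDS = "delete_records"


# Everything except reading and drafting is prohibited for this agent.
PROHIBITED = frozenset(Category) - {Category.READ, Category.DRAFT}

# Platform-owned classification of (tool, operation) pairs (illustrative).
CLASSIFICATION = {
    ("crm", "get_account"): Category.READ,
    ("crm", "bulk_export"): Category.EXPORT_SENSITIVE_DATA,
    ("iam", "grant_role"): Category.MODIFY_ACCESS_CONTROLS,
    ("docs", "write_draft"): Category.DRAFT,
}


class ProhibitedActionError(PermissionError):
    pass


def enforce_and_run(tool: str, operation: str, run: Callable[[], object]) -> object:
    # Unclassified operations fail closed and are treated as prohibited.
    category = CLASSIFICATION.get((tool, operation))
    if category is None or category in PROHIBITED:
        raise ProhibitedActionError(f"{tool}.{operation} is blocked ({category})")
    return run()  # only reached for permitted categories


print(enforce_and_run("crm", "get_account", lambda: "account summary"))  # account summary
try:
    enforce_and_run("crm", "bulk_export", lambda: "exported rows")
except ProhibitedActionError as err:
    print("blocked:", err)
```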

5. Human approval gates for high-risk actions

High-impact actions must require human-in-the-loop approval. Executives should define risk tiers and match approvals to risk. This is especially important for:

actions that affect systems of record

actions that impact customers

actions that touch regulated workflows

actions that change permissions or security controls

This preserves accountability and limits catastrophic error.
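Risk tiers can be encoded so that the number of required approvals follows from the properties of the proposed action. The sketch below is illustrative only: the three tiers, the approval counts, and the rule that permission changes and regulated workflows land in the highest tier are assumptions an enterprise would set for itself. Approvers are stored in a set so the same person approving twice counts once.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class RiskTier(IntEnum):
    LOW = 0
    MEDIUM = 1
    HIGH = 2


# Illustrative policy: how many distinct human approvers each tier needs.
REQUIRED_APPROVALS = {RiskTier.LOW: 0, RiskTier.MEDIUM: 1, RiskTier.HIGH: 2}


@dataclass
class ProposedAction:
    description: str
    touches_system_of_record: bool = False
    customer_impact: bool = False
    regulated_workflow: bool = False
    changes_permissions: bool = False
    approvers: set[str] = field(default_factory=set)


def classify(action: ProposedAction) -> RiskTier:
    # Assumed tiering: security and regulatory changes are the highest risk.
    if action.changes_permissions or action.regulated_workflow:
        return RiskTier.HIGH
    if action.touches_system_of_record or action.customer_impact:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def approve(action: ProposedAction, approver: str) -> None:
    action.approvers.add(approver)  # a set, so repeat approvals by one person count once


def may_execute(action: ProposedAction) -> bool:
    return len(action.approvers) >= REQUIRED_APPROVALS[classify(action)]


refund = ProposedAction("Issue refund", touches_system_of_record=True, customer_impact=True)
print(classify(refund).name, may_execute(refund))  # MEDIUM False
approve(refund, "ops.lead")
print(may_execute(refund))  # True
```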

6. Secure retrieval and data protection

Agents often rely on retrieval systems. Retrieval introduces risk if data is untrusted, misclassified, or poisoned. Secure retrieval requires:

authoritative sources only

strong metadata and classification

role-based retrieval filters

redaction of sensitive fields

secure embedding and indexing practices

monitoring for retrieval anomalies

Retrieval is a data security problem as much as an AI problem.
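Several of these controls can be applied as a filter between the retriever and the agent. The sketch below assumes hypothetical source names, a three-level classification scheme, and a role-to-clearance table. It drops non-authoritative documents, drops documents above the caller's clearance (treating unknown labels as most restrictive), and redacts two example field patterns with regular expressions. Production systems would typically enforce these filters inside the index at query time and use a proper data-loss-prevention classifier instead of regexes; embedding practices and anomaly monitoring are not shown.

```python
import re
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Document:
    doc_id: str
    source: str          # system the document was ingested from
    classification: str  # e.g. "public", "internal", "restricted"
    text: str


# Illustrative policy tables; real values would come from data governance.
AUTHORITATIVE_SOURCES = {"policy_portal", "hr_handbook"}
LEVELS = {"public": 0, "internal": 1, "restricted": 2}
ROLE_CLEARANCE = {"employee": 1, "hr_admin": 2}

# Example redaction patterns only: SSN-shaped numbers and email addresses.
REDACTIONS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[REDACTED-SSN]"),
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[REDACTED-EMAIL]"),
]


def redact(text: str) -> str:
    for pattern, replacement in REDACTIONS:
        text = pattern.sub(replacement, text)
    return text


def filter_results(results: list[Document], role: str) -> list[Document]:
    clearance = ROLE_CLEARANCE.get(role, -1)  # unknown roles see nothing
    unknown_level = max(LEVELS.values()) + 1  # unlabeled data is most restrictive
    safe = []
    for doc in results:
        if doc.source not in AUTHORITATIVE_SOURCES:
            continue
        if LEVELS.get(doc.classification, unknown_level) > clearance:
            continue
        safe.append(replace(doc, text=redact(doc.text)))
    return safe


results = [
    Document("d1", "hr_handbook", "internal", "Contact hr@example.com. Ref 123-45-6789."),
    Document("d2", "scraped_wiki", "public", "Unverified page"),
    Document("d3", "hr_handbook", "restricted", "Salary bands"),
]
for doc in filter_results(results, "employee"):
    print(doc.doc_id, doc.text)
# d1 Contact [REDACTED-EMAIL]. Ref [REDACTED-SSN].
```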

7. Prompt injection and tool manipulation defenses

Prompt injection is one of the most common exploitation methods for agentic systems. The agent may be tricked into:

revealing sensitive information

overriding system instructions

invoking restricted tools

executing unintended actions

Mitigation patterns include:

strong system prompt isolation

content filtering and validation

instruction hierarchy enforcement

tool call validation with explicit schemas

sandboxing tool execution

restricting external content ingestion

Executives should treat prompt injection as a real security vector, not a theoretical one.
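Tool call validation with explicit schemas is the most mechanical of these mitigations, and it works regardless of how an injected instruction got in: every tool call the model proposes is parsed as untrusted input and rejected unless it names a known tool with exactly the expected arguments and types. The schema table and tool names below are hypothetical, and the sketch uses a plain type map rather than a JSON Schema library to stay self-contained. It complements the other mitigations listed rather than replacing them.

```python
import json

# Explicit, platform-owned schemas: tool name -> {argument name: required type}.
TOOL_SCHEMAS = {
    "search_policies": {"query": str, "max_results": int},
    "create_ticket": {"title": str, "queue": str},
}


class ToolCallRejected(ValueError):
    pass


def validate_tool_call(raw: str) -> tuple[str, dict]:
    """Treat a model-proposed tool call as untrusted input and validate it."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ToolCallRejected(f"not valid JSON: {exc}") from exc
    if not isinstance(call, dict) or set(call) != {"tool", "args"}:
        raise ToolCallRejected("a call must contain exactly 'tool' and 'args'")
    name, args = call["tool"], call["args"]
    if not isinstance(name, str) or name not in TOOL_SCHEMAS:
        raise ToolCallRejected(f"unknown tool {name!r}")
    schema = TOOL_SCHEMAS[name]
    if not isinstance(args, dict) or set(args) != set(schema):
        raise ToolCallRejected(f"arguments must be exactly {sorted(schema)}")
    for key, expected in schema.items():
        value = args[key]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            raise ToolCallRejected(f"{key!r} must be of type {expected.__name__}")
    return name, args


print(validate_tool_call(
    '{"tool": "search_policies", "args": {"query": "leave policy", "max_results": 5}}'
))
# ('search_policies', {'query': 'leave policy', 'max_results': 5})
```

An injected instruction that persuades the model to emit a call to an unlisted tool such as `export_all_records` is rejected here with `ToolCallRejected`, because that tool has no schema.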

8. Continuous monitoring and anomaly detection

Agentic systems require continuous monitoring. Monitoring should track:

unusual tool usage patterns

unexpected data access

high-frequency requests

abnormal decision chains

attempts to execute prohibited actions

suspicious retrieval results

escalating error rates and drift

Monitoring must be tied to escalation and intervention. If monitoring exists but no one responds, the system is ungoverned.
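The sketch below shows the coupling the text calls for: every signal the monitor detects is passed to an `escalate` callback, a hypothetical stand-in for whatever paging or ticketing channel the enterprise uses. It covers three of the listed signals (tools outside an expected baseline, blocked attempts at prohibited actions, and request rates above a per-minute threshold). Decision-chain analysis, retrieval anomalies, and drift detection need more context than a short sketch can carry, and alert deduplication is not shown.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentEvent:
    agent_id: str
    timestamp: float       # seconds
    tool: str
    blocked: bool = False  # True if a guardrail refused the action


class AgentMonitor:
    """Hypothetical monitor: every alert is routed to an escalation handler."""

    def __init__(self, baseline_tools: set[str], max_requests_per_minute: int,
                 escalate: Callable[[str, str], None]) -> None:
        self.baseline_tools = baseline_tools
        self.max_rpm = max_requests_per_minute
        self.escalate = escalate
        self.windows: dict[str, deque] = {}

    def observe(self, event: AgentEvent) -> None:
        if event.tool not in self.baseline_tools:
            self.escalate(event.agent_id, f"unusual tool usage: {event.tool}")
        if event.blocked:
            self.escalate(event.agent_id, f"attempted prohibited action via {event.tool}")
        # Per-agent sliding 60-second window of request timestamps.
        window = self.windows.setdefault(event.agent_id, deque())
        window.append(event.timestamp)
        while window and event.timestamp - window[0] >= 60:
            window.popleft()
        if len(window) > self.max_rpm:
            self.escalate(event.agent_id, f"high request rate: {len(window)}/min")


alerts = []
monitor = AgentMonitor({"search_policies", "create_ticket"}, max_requests_per_minute=3,
                       escalate=lambda agent, reason: alerts.append((agent, reason)))
for t in range(5):
    monitor.observe(AgentEvent("hr-agent", float(t), "search_policies"))
monitor.observe(AgentEvent("hr-agent", 10.0, "iam_grant_role", blocked=True))
for agent, reason in alerts:
    print(agent, reason)
```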

9. Audit logging and evidence capture

Every agent decision and action should generate audit evidence.

This includes:

what data was accessed

what tools were invoked

what actions were attempted and executed

what approvals were captured

what confidence thresholds were applied

what outputs were generated

which human owner is accountable

This makes agent operations explainable and defensible.
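One way to capture this evidence is an append-only log with one structured record per agent step, with fields that follow the list above. The sketch below adds hash chaining, an assumption beyond the text: each record stores the SHA-256 hash of its predecessor, so editing or removing an earlier record breaks verification. `AuditLog` and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only audit trail; each record embeds the hash of its predecessor."""

    def __init__(self) -> None:
        self.records: list[dict] = []

    def record(self, *, agent_id: str, owner: str, data_accessed: list[str],
               tools_invoked: list[str], actions_attempted: list[str],
               actions_executed: list[str], approvals: list[str],
               confidence_threshold: float, outputs: str) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "accountable_owner": owner,
            "data_accessed": list(data_accessed),
            "tools_invoked": list(tools_invoked),
            "actions_attempted": list(actions_attempted),
            "actions_executed": list(actions_executed),
            "approvals": list(approvals),
            "confidence_threshold": confidence_threshold,
            "outputs": outputs,
            "prev_hash": self.records[-1]["hash"] if self.records else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.records.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check each link in the chain.
        prev = GENESIS
        for entry in self.records:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def to_jsonl(self) -> str:
        return "\n".join(json.dumps(r, sort_keys=True) for r in self.records)


log = AuditLog()
log.record(agent_id="compliance-evidence-agent", owner="j.doe",
           data_accessed=["policy_portal/access-review"], tools_invoked=["search_policies"],
           actions_attempted=["collect_evidence"], actions_executed=["collect_evidence"],
           approvals=[], confidence_threshold=0.8, outputs="Evidence bundle for access review")
print(log.verify())  # True
```

Hash chaining only proves integrity if the latest hash is also stored somewhere the agent and its operators cannot rewrite, such as a separate write-once store.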

10. Kill-switch and containment mechanisms

Enterprises must be able to stop agent behavior quickly. This includes:

pause capability

rollback capability

environment isolation

throttling

degradation modes

automated shutdown when thresholds are exceeded

This turns agentic AI into an operational system that can be managed under stress.
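A containment controller can be consulted before every agent step. The sketch below is hypothetical and covers three of the listed mechanisms: an operator pause, a degradation mode that refuses side-effecting actions while still allowing reads, and an automated shutdown when the observed error rate crosses a threshold. The thresholds and the minimum sample size are illustrative. Rollback, environment isolation, and throttling depend on the surrounding platform and are not shown.

```python
from enum import Enum


class Mode(Enum):
    RUNNING = "running"
    DEGRADED = "degraded"  # read-only: side-effecting actions refused
    PAUSED = "paused"      # nothing runs until a human resumes


class KillSwitch:
    """Hypothetical containment controller consulted before every agent step."""

    def __init__(self, degrade_error_rate: float, shutdown_error_rate: float,
                 min_samples: int = 20) -> None:
        self.degrade_at = degrade_error_rate
        self.shutdown_at = shutdown_error_rate
        self.min_samples = min_samples
        self.mode = Mode.RUNNING
        self.reason = ""
        self.calls = 0
        self.errors = 0

    def pause(self, reason: str) -> None:
        self.mode = Mode.PAUSED
        self.reason = reason

    def resume(self) -> None:
        # Resuming is a deliberate human action; counters start fresh.
        self.mode = Mode.RUNNING
        self.reason = ""
        self.calls = self.errors = 0

    def report(self, success: bool) -> None:
        self.calls += 1
        self.errors += 0 if success else 1
        if self.calls < self.min_samples:
            return  # too few samples to judge the error rate
        rate = self.errors / self.calls
        if rate >= self.shutdown_at:
            self.pause(f"automated shutdown: error rate {rate:.0%}")
        elif rate >= self.degrade_at and self.mode is Mode.RUNNING:
            self.mode = Mode.DEGRADED

    def allows(self, side_effecting: bool) -> bool:
        if self.mode is Mode.PAUSED:
            return False
        if self.mode is Mode.DEGRADED:
            return not side_effecting
        return True


switch = KillSwitch(degrade_error_rate=0.10, shutdown_error_rate=0.25)
for i in range(20):
    switch.report(success=(i % 5 != 0))  # 4 failures in 20 calls = 20% error rate
print(switch.mode.name, switch.allows(side_effecting=True))  # DEGRADED False
switch.pause("operator pressed the kill switch")
print(switch.allows(side_effecting=False))  # False
```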

The Executive Requirement: Security Must Be an Enabler, Not a Blocker

Executives should not allow security to become the reason agentic AI is delayed indefinitely. But they also should not allow uncontrolled agent adoption. Secure-by-design is the balance. It enables scaling by making agent operations safe, measurable, and governable. It also prevents a common enterprise failure: launching agents in uncontrolled ways through shadow IT, unmanaged tools, and inconsistent access patterns. The enterprise must treat agentic AI as a core capability, and that requires security discipline early.

Takeaways

Agentic AI introduces active operational capacity. That capacity creates value and exposure. Secure-by-design agentic systems are built on practical patterns: least privilege, agent identity, scoped tools, guardrails, approval gates, secure retrieval, injection defenses, monitoring, audit logging, and kill-switch controls.

The question is not whether enterprises will adopt agents. They will. The question is whether they will adopt agents securely enough to scale. Secure-by-design is what makes agentic AI durable enterprise infrastructure.