Model Risk Management Meets Software Engineering by Mark Hewitt

Enterprise AI is maturing. Many organizations have moved beyond pilots and are deploying models and agentic workflows into core operations. AI is now influencing customer interactions, productivity, decision-making, compliance workflows, and operational monitoring.

As this happens, the enterprise must confront a reality that many early AI efforts avoided. AI systems are not experiments once they are operational. They are production systems. And in production, risk must be managed with engineering discipline.

This is where enterprises encounter a gap. Many organizations treat AI risk as a compliance and policy issue. They rely on legal review, ethics boards, and governance committees. Those elements are necessary, but they are incomplete. AI risk must also be treated as a software engineering issue.

The enterprises that scale AI safely will integrate model risk management into software engineering practices. They will treat models and agent workflows with the same rigor as critical software systems, while accounting for the unique risks of AI behavior.

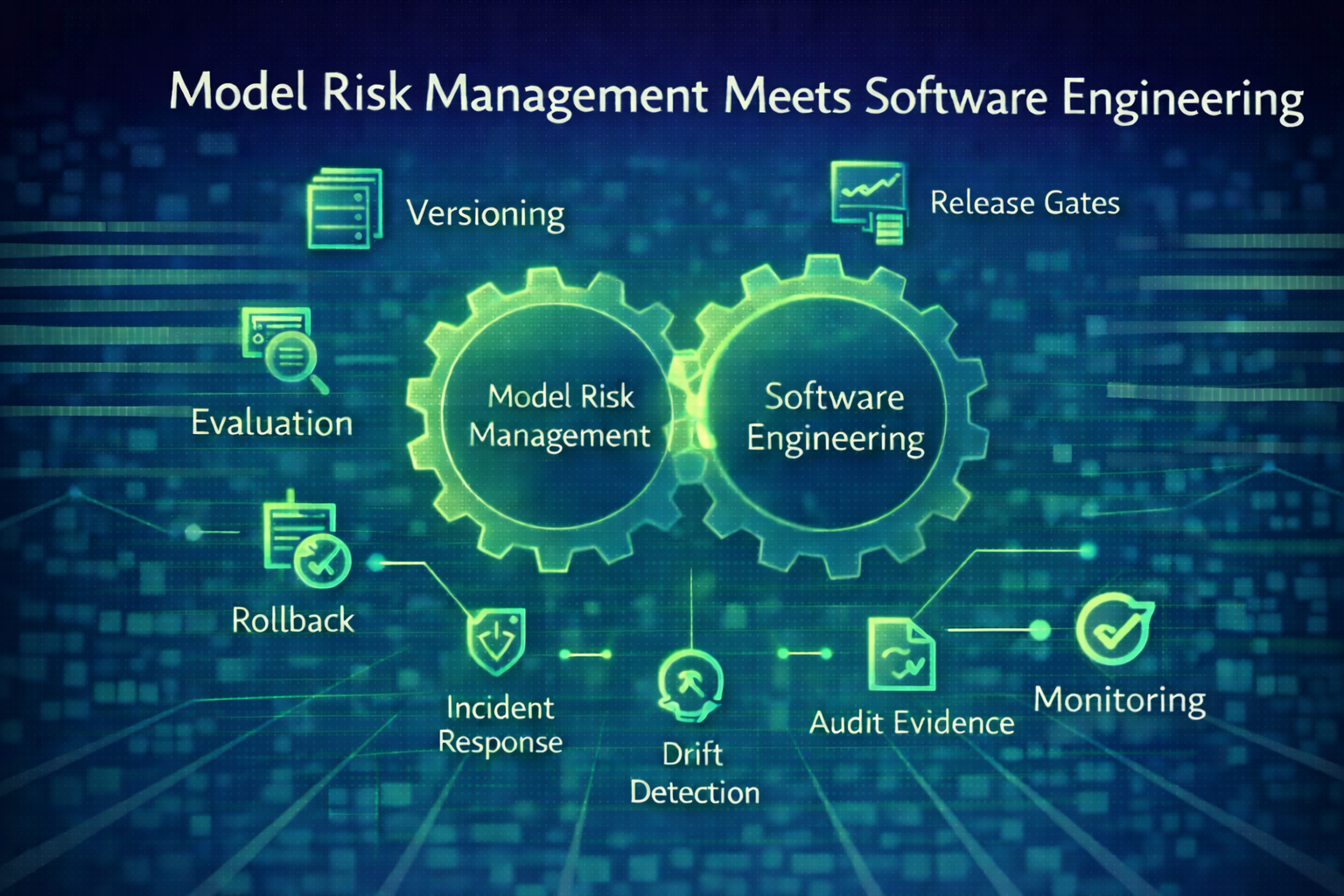

This is the convergence of model risk management and software engineering.

Why Traditional Model Risk Management Is Not Enough

Model risk management has existed for years in regulated industries. It was designed for statistical models in finance, insurance, and risk scoring. The goal was to ensure transparency, stability, validation, and oversight.

Modern AI changes the playing field. AI systems can:

behave probabilistically rather than deterministically

produce plausible but incorrect outputs

drift as data changes and conditions evolve

be sensitive to prompt and context changes

interact with external tools and take action

be difficult to explain in traditional terms

affect many workflows simultaneously

These behaviors cannot be managed through policies alone. They require systems thinking, observability, testing, deployment discipline, and continuous monitoring. In other words, they require software engineering.

Why Software Engineering Alone Is Not Enough

Software engineering provides strong discipline for production systems. CI/CD pipelines, test automation, observability, incident response, security controls, and reliability patterns are well established. But AI introduces additional risk dimensions that traditional software engineering does not fully address. These include:

uncertainty about output correctness

bias and fairness concerns

data drift and concept drift

prompt sensitivity and prompt injection risks

non-reproducible behavior under changing context

evaluation complexity for open-ended outputs

model behavior that depends on external retrieval and knowledge bases

This is why the enterprise must combine both disciplines. Model risk management provides the governance intent and validation expectations. Software engineering provides operational discipline and control. Together they create scalable, accountable AI.

The AI Risk Profile: Four Risk Categories Executives Must Govern

Executives can simplify AI risk into four categories.

Performance and correctness risk. Does the system produce accurate, useful, and consistent results in real conditions?

Governance and compliance risk. Can the enterprise prove control, explain decisions, and meet regulatory and policy requirements?

Security and misuse risk. Can the system be exploited, manipulated, or used to access unauthorized data and actions?

Operational stability risk. Can the system be monitored, recovered, and maintained without fragility or unexpected failures?

These risks are not static. They shift as data, usage, and the systems themselves change, which is why continuous operational control is required.

The Integration Model: How to Embed Model Risk Management Into Engineering

Executives should insist that AI systems follow a production lifecycle that integrates risk management into each phase. Below is a practical enterprise model.

1. Versioning and traceability for models, prompts, and retrieval

Models are not the only moving parts. Prompts, retrieval assets, embeddings, and tool chains also change behavior. Enterprises should implement:

version control for model configurations and prompts

versioning for retrieval sources and indexes

traceability for changes and approvals

reproducibility mechanisms for critical workflows

This is foundational for governance, debugging, and audit readiness.
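One way to make this concrete is a release manifest. The manifest pins every component that can change behavior and derives a single fingerprint from them, so any change to a model, prompt, index, or tool produces a new, traceable version. The sketch below is a minimal illustration. `ReleaseManifest` and its field names are hypothetical and do not reflect any specific tool's format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class ReleaseManifest:
    """Hypothetical record pinning every component that can change AI behavior."""

    model_id: str             # model name or internal model version
    model_params: dict        # e.g. {"temperature": 0.2}
    prompt_template: str      # the full prompt text, not just a file name
    retrieval_index: str      # version tag of the retrieval index and embeddings
    tools: tuple = ()         # tool chain versions, e.g. ("search@2.1",)
    approved_by: tuple = ()   # approvals, kept for traceability

    def fingerprint(self) -> str:
        """Deterministic SHA-256 over the behavior-affecting fields.

        Approvals are excluded so that signing off on a release does not
        change the identity of what was signed off.
        """
        payload = asdict(self)
        payload.pop("approved_by")
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Usage: a one-word prompt edit yields a different fingerprint.
base = ReleaseManifest("model-a", {"temperature": 0.2}, "Summarize: {doc}", "kb-v7")
tweaked = replace(base, prompt_template="Summarize briefly: {doc}")
print(base.fingerprint() != tweaked.fingerprint())  # True
```

Because the fingerprint covers prompts and retrieval versions as well as the model, a "small" prompt tweak cannot slip into production without showing up as a new version.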

2. Testing and evaluation pipelines

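Traditional software tests validate deterministic behavior: the same input should produce the same output. AI outputs can vary and are often open-ended, so they require evaluation frameworks that score behavior against expected outcomes. Executives should expect: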

baseline evaluation sets tied to workflow outcomes

regression testing for model updates and prompt changes

adversarial testing for misuse and injection

bias and fairness evaluation where relevant

reliability testing for edge cases and uncertainty

Testing must be continuous. AI can degrade as data shifts and usage expands.
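A regression check can compare a candidate configuration (a new model, prompt, or index) against the current baseline on the same evaluation set. The sketch below assumes three things:

- Each evaluation case has already been scored from 0 to 1, whether by a rubric, an automated grader, or exact match.
- The function name and tolerances are hypothetical.
- Both tolerances are illustrative defaults.

```python
from statistics import mean


def regression_report(baseline: dict[str, float],
                      candidate: dict[str, float],
                      max_mean_drop: float = 0.02,
                      max_case_drop: float = 0.2) -> dict:
    """Compare per-case scores (0..1) of a candidate against the baseline.

    Both dicts map evaluation-case IDs to scores on the same evaluation set.
    Tolerances are illustrative assumptions, not recommended values.
    """
    missing = set(baseline) - set(candidate)
    if missing:
        raise ValueError(f"candidate not scored on cases: {sorted(missing)}")

    base_mean = mean(baseline.values())
    cand_mean = mean(candidate[case] for case in baseline)
    # Cases that got much worse, even if the overall average held up.
    regressed = sorted(case for case in baseline
                       if baseline[case] - candidate[case] > max_case_drop)
    passed = (base_mean - cand_mean) <= max_mean_drop and not regressed
    return {"baseline_mean": base_mean, "candidate_mean": cand_mean,
            "regressed_cases": regressed, "passed": passed}
```

The per-case check matters because an average can stay flat while a specific, important workflow case breaks.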

3. Deployment discipline and gating

AI systems should not be deployed through informal processes. Enterprises should require:

CI/CD pipelines that include evaluation gates

risk-tiered approvals before deployment

staged rollout and canary release patterns

rollback capability for model and prompt changes

guardrail validation before activation

This brings AI into the discipline of modern software delivery.
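An evaluation gate with risk-tiered approvals can be written as a small policy function that a CI/CD pipeline calls before promoting a release. The tier names, score thresholds, approval counts, and canary percentages below are illustrative assumptions, not a recommended policy.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy: higher-risk tiers need better scores, more sign-offs,
# and a smaller initial canary share.
TIER_POLICY = {
    "low":    {"min_eval_score": 0.80, "approvals": 0, "canary_pct": 50},
    "medium": {"min_eval_score": 0.90, "approvals": 1, "canary_pct": 10},
    "high":   {"min_eval_score": 0.95, "approvals": 2, "canary_pct": 1},
}


@dataclass
class Candidate:
    tier: str
    eval_score: float                # aggregate score from the evaluation pipeline
    guardrails_passed: bool          # result of guardrail validation
    approvers: set                   # distinct people who signed off
    rollback_target: Optional[str]   # release to revert to if the canary fails


def gate(c: Candidate) -> dict:
    """Decide whether a release may start a staged rollout. Any reason blocks it."""
    policy = TIER_POLICY[c.tier]
    reasons = []
    if c.eval_score < policy["min_eval_score"]:
        reasons.append(f"eval score {c.eval_score} below {policy['min_eval_score']}")
    if not c.guardrails_passed:
        reasons.append("guardrail validation failed")
    if len(c.approvers) < policy["approvals"]:
        reasons.append(f"needs {policy['approvals']} approvals, has {len(c.approvers)}")
    if c.rollback_target is None:
        reasons.append("no rollback target recorded")
    return {"allowed": not reasons, "reasons": reasons,
            "canary_pct": policy["canary_pct"]}
```

If the gate allows promotion, the pipeline would begin with the tier's canary percentage and widen traffic only while monitoring stays healthy.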

4. Runtime monitoring and drift detection

AI behavior must be monitored like production systems. Monitoring should include:

output quality trends and error rates

confidence scoring and uncertainty patterns

drift indicators for data sources and retrieval content

anomaly detection for behavior shifts

tool usage monitoring for agentic workflows

security monitoring for unusual patterns

business impact metrics tied to workflows

Drift is inevitable. The enterprise advantage comes from detecting and correcting it early.
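A drift indicator can be as simple as comparing the distribution of a monitored signal in a recent window against a reference window. Suitable signals include input length, retrieval similarity scores, or model confidence. The sketch below uses the Population Stability Index (PSI), a common choice in credit-risk model monitoring, as one possible technique. The equal-width binning and the 0.1 / 0.25 alert bands are conventional rules of thumb, assumed here, and should be tuned per workflow.

```python
import math


def psi(reference: list[float], current: list[float], bins: int = 10,
        eps: float = 1e-6) -> float:
    """Population Stability Index between two samples of one numeric signal.

    Uses equal-width bins over the reference range; values outside that
    range are clamped into the first or last bin.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for a constant reference

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from causing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def drift_status(score: float) -> str:
    # Rule-of-thumb bands (assumed); tune per workflow.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "watch"
    return "alert"
```

A scheduled job would compute this per signal and route "watch" to dashboards and "alert" into the incident process.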

5. Incident response and escalation for AI

Many enterprises lack a playbook for AI incidents. Executives should require AI incident response discipline:

clear ownership and on-call responsibility

escalation triggers tied to thresholds

kill-switch capability for agents

rollback procedures

post-incident review focused on improving controls

structured remediation pathways for data or model issues

AI incidents are operational incidents. They must be managed as such.
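Escalation triggers and a kill switch can be wired into the agent runtime itself, so that crossing a threshold halts actions without waiting for someone to notice. The sketch below is a hypothetical circuit breaker around an agent's actions. The window size, failure-rate threshold, and `page_on_call` callback are assumptions; the callback stands in for whatever paging system the enterprise uses.

```python
from collections import deque
from typing import Callable


class AgentKillSwitch:
    """Hypothetical circuit breaker that halts an agent when failures cross a threshold."""

    def __init__(self, page_on_call: Callable[[str], None],
                 window: int = 20, max_failure_rate: float = 0.25):
        self.page_on_call = page_on_call      # stand-in for real paging/alerting
        self.recent = deque(maxlen=window)    # True = failed or flagged action
        self.max_failure_rate = max_failure_rate
        self.halted = False
        self.events: list[str] = []           # record for post-incident review

    def record(self, failed: bool) -> None:
        """Record one action outcome; trip the switch once the window is full and over threshold."""
        self.recent.append(failed)
        rate = sum(self.recent) / len(self.recent)
        window_full = len(self.recent) == self.recent.maxlen
        if window_full and rate > self.max_failure_rate and not self.halted:
            self.halt(f"failure rate {rate:.0%} over last {len(self.recent)} actions")

    def halt(self, reason: str) -> None:
        """Automatic or manual kill switch; stays tripped until a person resets it."""
        self.halted = True
        self.events.append(f"HALT: {reason}")
        self.page_on_call(reason)

    def allow_action(self) -> bool:
        return not self.halted

    def reset(self, operator: str) -> None:
        # Resuming is an explicit, attributable human decision.
        self.halted = False
        self.recent.clear()
        self.events.append(f"RESET by {operator}")
```

The agent's tool dispatcher would check `allow_action()` before every call. Requiring a named operator to reset keeps resumption inside the ownership and review loop.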

6. Evidence capture and audit readiness

Executives must be able to prove control. This requires automated evidence capture:

logs of decisions, outputs, and actions

data lineage visibility for retrieved context

records of approvals and governance gates

monitoring reports and drift indicators

exception logs and intervention actions

Evidence must be built into the system, not assembled manually when requested.
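Built-in evidence capture usually means each output, action, approval, and intervention is written to an append-only record at the moment it happens. The sketch below shows one tamper-evident approach: a simple hash chain in which every entry includes the hash of the previous one. A production system would more likely use a managed immutable store. The event types and fields shown are hypothetical.

```python
import hashlib
import json
import time


class EvidenceLog:
    """Hypothetical append-only, hash-chained log of AI outputs, actions, and approvals."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries: list[dict] = []

    def append(self, event_type: str, **details) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {"ts": time.time(), "type": event_type,
                 "details": details, "prev_hash": prev_hash}
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        payload = {k: v for k, v in entry.items() if k != "hash"}
        canonical = json.dumps(payload, sort_keys=True, default=str)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain. Editing any entry, or removing one before the
        last, breaks it; detecting truncation of the tail would additionally
        require storing the latest hash somewhere external."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


log = EvidenceLog()
log.append("model_output", workflow="claims-triage", release="abc123")
log.append("approval", gate="deploy", approver="alice")
log.append("intervention", action="rollback", reason="drift alert")
```

Because records are produced by the running system, an audit request becomes a query plus a `verify()` call rather than a manual reconstruction.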

The Executive Shift: From Models to Systems

Enterprises often focus on model selection. They ask which model is best, which provider is safest, and which tool is most advanced. Those questions matter, but they miss a more important point.

Enterprise advantage comes from systems, not models.

AI systems include models, prompts, retrieval, workflows, governance, monitoring, and ownership. Risk lives in the entire chain.

Model risk management meets software engineering because enterprises must govern the entire chain.

This is also why engineering intelligence becomes foundational: it provides observability, traceability, and operational control across the AI system lifecycle.

A Practical Executive Starting Point

Executives can begin integrating model risk management into engineering through five steps.

Define risk tiers and required controls for each tier

Establish standardized versioning and evaluation pipelines

Embed governance controls into CI/CD workflows

Implement runtime monitoring and drift detection for all AI systems

Create AI incident response pathways with ownership and escalation

This approach turns AI governance into repeatable operational discipline.
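The first step, defining risk tiers and their required controls, can be captured as data the delivery pipeline reads rather than as a document people must remember. The classification criteria, tier names, and control names below are hypothetical examples of how such a mapping might look; each enterprise would set its own.

```python
from dataclasses import dataclass

# Hypothetical controls; each tier also inherits everything required by lower tiers.
CONTROLS_BY_TIER = {
    "low": ["versioning", "evaluation_pipeline", "runtime_monitoring"],
    "medium": ["regression_gate", "rollback_plan", "on_call_owner"],
    "high": ["human_approval", "kill_switch", "evidence_log", "bias_review"],
}
TIER_ORDER = ["low", "medium", "high"]


@dataclass
class AISystemProfile:
    customer_facing: bool
    takes_actions: bool           # agent can call tools or change records
    regulated_decision: bool      # e.g. credit, claims, or hiring decisions
    handles_sensitive_data: bool


def assign_tier(p: AISystemProfile) -> str:
    """Illustrative rule: action-taking or regulated systems are high risk."""
    if p.regulated_decision or p.takes_actions:
        return "high"
    if p.customer_facing or p.handles_sensitive_data:
        return "medium"
    return "low"


def required_controls(tier: str) -> list[str]:
    """All controls for a tier, including those inherited from lower tiers."""
    controls = []
    for t in TIER_ORDER[: TIER_ORDER.index(tier) + 1]:
        controls.extend(CONTROLS_BY_TIER[t])
    return controls
```

Making tiers cumulative means a system can only gain controls as its risk rises, never silently lose the baseline ones.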

Takeaways

Enterprise AI is no longer experimental. It is operational. And operational systems require engineering discipline. Model risk management provides governance intent. Software engineering provides control and reliability practices. Together they create scalable, safe AI adoption.

Enterprises that treat AI as production software will scale with confidence.

Enterprises that treat AI as an ongoing experiment will remain constrained by trust, risk, and inconsistency.

This is the executive opportunity.

Bring model risk management into software engineering, and make AI governable at scale.