Understanding LLMs Through Improv: Why System Prompts Matter

by Dakota Kim

Your AI chatbot that worked perfectly last month suddenly writes verbose responses. Your competitor's bot handles the same questions completely differently. ChatGPT and Claude give you different answers to identical prompts.

These aren't random quirks. They usually trace back to the same thing: system prompts. Nearly every AI product you interact with follows invisible instructions that shape its personality and behavior. Once you understand how these work, you can better control what you get out of AI tools.

The Improv Analogy

Interacting with an LLM is like walking into an improv theater. You shout suggestions, the performer responds. But before you arrived, the performer got backstage instructions: "Tonight's show is corporate-friendly. Keep it professional."

These backstage instructions are system prompts: invisible rules shaping every AI interaction.

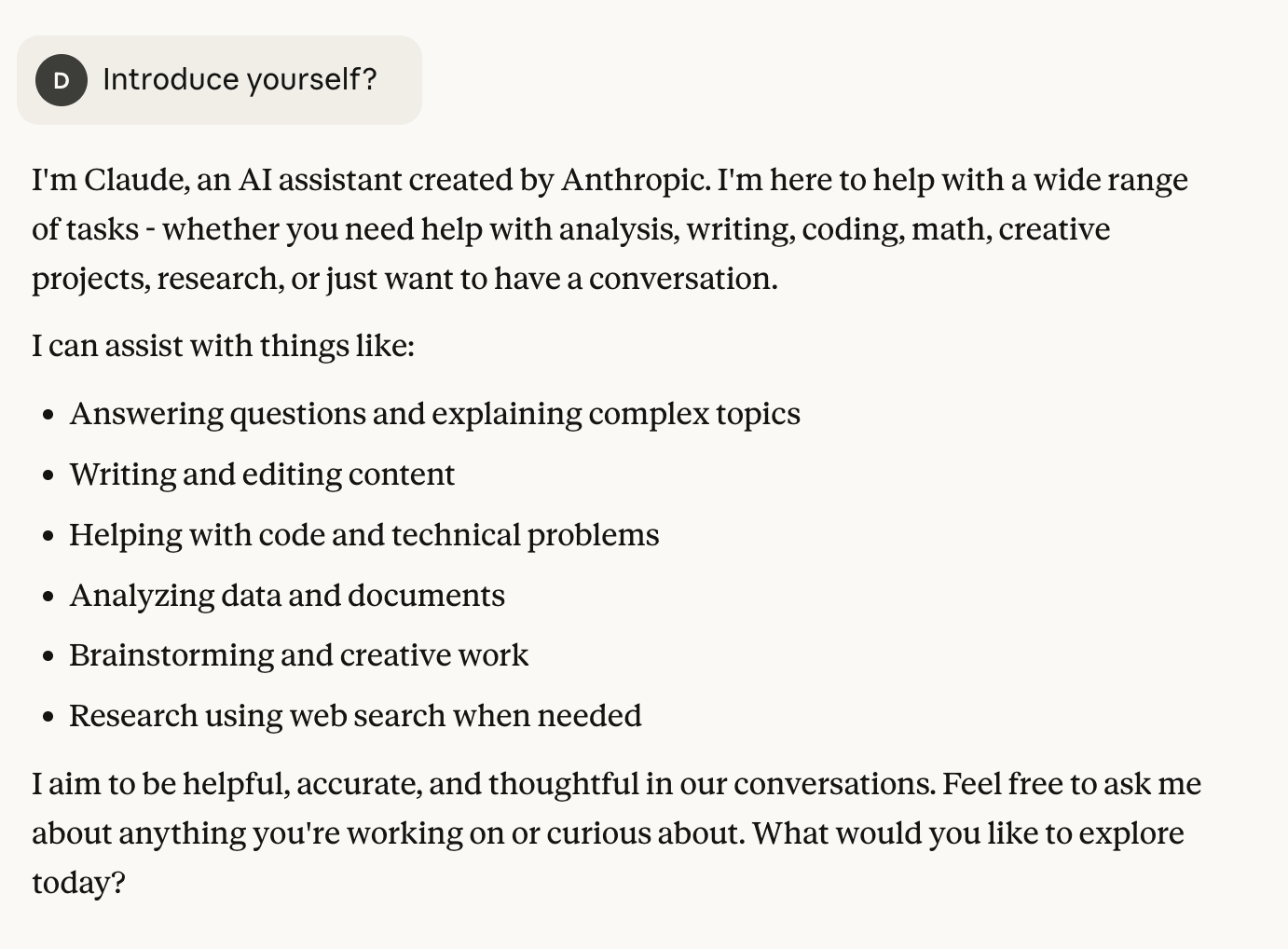

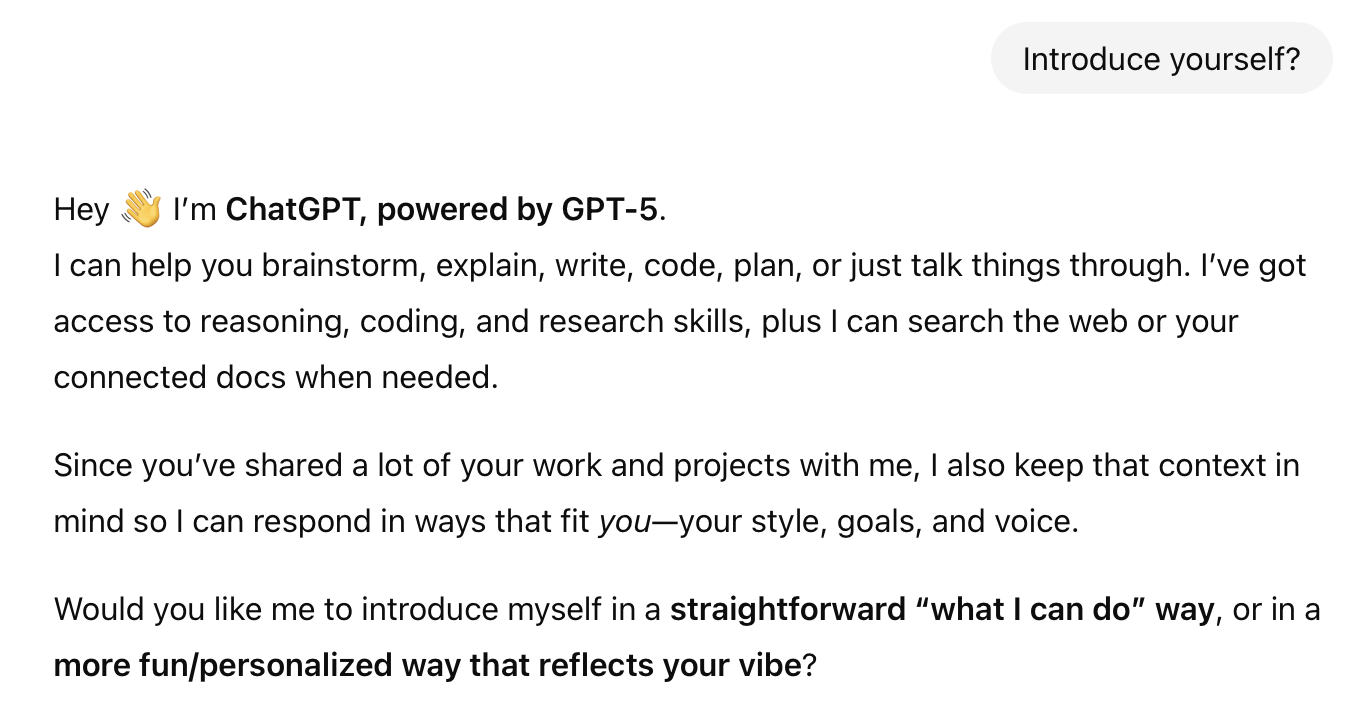

Try it out: start a new chat with a few different LLMs and prompt each one with “Introduce yourself.”

Even from a simple introduction, we can begin to see how the system prompt sets up the conversation. The AI knows its role, and the system prompt also tells it how to help the user.

How System Prompts Shape Behavior

Every LLM interface starts with a system prompt, from simple ("You are a helpful assistant") to complex multi-paragraph behavior definitions.
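To make this concrete, here is a minimal sketch of where a system prompt typically sits. It assumes the role-tagged message list that many chat APIs accept; the `build_messages` helper is hypothetical and makes no real API call.

```python
# Assumed convention: a conversation is a list of {"role", "content"} messages,
# with the system prompt placed first. No provider is actually called here.
SYSTEM_PROMPT = "You are a helpful assistant"


def build_messages(user_message: str, system_prompt: str = SYSTEM_PROMPT) -> list[dict]:
    """Return the messages an application would send for a single user turn."""
    return [
        {"role": "system", "content": system_prompt},  # the "backstage" instructions
        {"role": "user", "content": user_message},     # what the user actually typed
    ]


if __name__ == "__main__":
    for message in build_messages("Introduce yourself."):
        print(f"{message['role']:>6}: {message['content']}")
```

The user only ever sees the second message, but the model receives both.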

Time for a Thought Experiment: Consider a customer support bot with these instructions: "You represent TechCorp. Always acknowledge the customer's concern first. Provide solutions as numbered lists. Never mention competitors."

Suppose the company upgrades to a newer model such as GPT-5, expecting improvements. Something unexpected happens instead. Perhaps the new model interprets "acknowledge the customer's concern" more expansively, adding two extra sentences of empathy before each solution. The numbered lists become more detailed. What used to be crisp 30-second responses balloon into minute-long explanations.

Key insight: Model upgrades don't automatically improve your AI application. The model and system prompt work together to create behavior!
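One way to catch this kind of drift is to check responses against the rules in the system prompt before and after an upgrade. The sketch below is illustrative only: the competitor names, the word budget, and both sample responses are made up for the example and are not real model output.

```python
import re

COMPETITORS = {"RivalSoft", "OtherCorp"}  # hypothetical competitor names
MAX_WORDS = 40                            # assumed length budget for a "crisp" reply


def check_response(text: str) -> dict[str, bool]:
    """Check one response against rules taken from the TechCorp system prompt."""
    lowered = text.lower()
    return {
        # A line that starts with "1." or "1)" counts as a numbered list.
        "has_numbered_list": bool(re.search(r"^\s*1[.)]\s", text, flags=re.MULTILINE)),
        "no_competitors": not any(name.lower() in lowered for name in COMPETITORS),
        "within_length": len(text.split()) <= MAX_WORDS,
    }


# Hand-written stand-ins for responses from the old and the upgraded model.
old_response = (
    "Sorry about the dropped connection.\n"
    "1. Restart the router.\n"
    "2. Update the firmware."
)
new_response = (
    "I completely understand how frustrating this must be. " * 6
    + "\n1. Restart the router.\n2. Update the firmware."
)

if __name__ == "__main__":
    for label, response in [("old", old_response), ("new", new_response)]:
        print(label, check_response(response))
```

Running the same checks on a fixed set of customer questions for each model version turns "it feels wordier" into something you can measure.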

The Technical Reality

LLMs have no memory between conversations. Zero. Each interaction starts fresh. The model doesn't know you, yesterday's chat, or your preferences unless explicitly provided in the current context window. This isn't a bug; it's the architecture.

This explains why:

Today's ChatGPT conversation has no connection to yesterday's

The same prompt produces different results across platforms

Switching providers means adapting to new "house rules"
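The statelessness described above can be sketched with a toy chat loop. `fake_model` is a hypothetical stand-in for an LLM call: it can only answer from the messages it is handed, which is the point of the example.

```python
PREFIX = "My name is "


def fake_model(messages: list[dict]) -> str:
    """Stand-in for an LLM: it only 'knows' what appears in `messages`."""
    names = [
        m["content"][len(PREFIX):].rstrip(".")
        for m in messages
        if m["role"] == "user" and m["content"].startswith(PREFIX)
    ]
    if messages[-1]["content"] == "What is my name?":
        return f"Your name is {names[-1]}." if names else "I don't know your name."
    return "Nice to meet you."


class Chat:
    """One conversation: the application, not the model, holds the history."""

    def __init__(self, system_prompt: str):
        self.messages = [{"role": "system", "content": system_prompt}]

    def send(self, text: str) -> str:
        self.messages.append({"role": "user", "content": text})
        reply = fake_model(self.messages)  # the full history is resent every turn
        self.messages.append({"role": "assistant", "content": reply})
        return reply


if __name__ == "__main__":
    yesterday = Chat("You are a helpful assistant")
    yesterday.send("My name is Dakota.")
    print(yesterday.send("What is my name?"))  # the name is in this chat's history

    today = Chat("You are a helpful assistant")
    print(today.send("What is my name?"))      # fresh history, nothing to draw on
```

Within one conversation the "memory" is just the growing message list; a new conversation starts with an empty one.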

Engineering Around Limitations

This statelessness seems limiting, but engineers have gotten creative.

ChatGPT's "memory" feature? It's not actually remembering. It's likely using RAG (Retrieval-Augmented Generation) to fetch relevant context from previous conversations and inject it into the current prompt. The illusion of memory through clever engineering.
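A rough sketch of the retrieve-and-inject idea follows. It is not how ChatGPT's memory is actually implemented, which isn't documented here. Retrieval is simple keyword overlap rather than the embedding search a real RAG system would likely use, and the stored notes and helper names are hypothetical.

```python
import re

# Hypothetical notes saved from earlier conversations.
NOTES = [
    "The user prefers vegetarian recipes.",
    "The user is learning Rust.",
    "The user lives in Denver.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def retrieve(query: str, notes: list[str], k: int = 1) -> list[str]:
    """Return up to k notes sharing at least one word with the query."""
    query_words = tokenize(query)
    ranked = sorted(notes, key=lambda n: len(query_words & tokenize(n)), reverse=True)
    return [note for note in ranked[:k] if query_words & tokenize(note)]


def build_messages(system_prompt: str, query: str, notes: list[str]) -> list[dict]:
    """Inject retrieved notes into the system prompt for this one request."""
    relevant = retrieve(query, notes)
    if relevant:
        bullet_list = "\n".join(f"- {note}" for note in relevant)
        system_prompt += "\n\nRelevant notes from earlier conversations:\n" + bullet_list
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": query},
    ]


if __name__ == "__main__":
    messages = build_messages(
        "You are a helpful assistant", "Suggest some recipes for dinner.", NOTES
    )
    print(messages[0]["content"])
```

The model still starts from zero; the application simply decides what to put on stage before each request.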

Agentic systems that reason step-by-step? They're built by acknowledging these limitations and creating software scaffolding that "sets the stage" repeatedly, feeding outputs back as inputs with new instructions.
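The scaffolding can be sketched as a plain loop that rebuilds the prompt on every step and feeds the previous output back in. This is a toy under stated assumptions: `fake_model` is a hypothetical stand-in that just counts down, and the `DONE` stop signal is an invented convention.

```python
def fake_model(messages: list[dict]) -> str:
    """Stand-in for an LLM call: counts down from the number ending the last message."""
    remaining = int(messages[-1]["content"].split()[-1])
    return "DONE" if remaining <= 1 else f"Remaining steps: {remaining - 1}"


def run_agent(task: str, system_prompt: str, max_steps: int = 10) -> list[str]:
    """Call the model repeatedly, setting the stage from scratch on each step."""
    transcript = []
    current_input = task
    for _ in range(max_steps):  # hard cap so the loop always terminates
        messages = [
            {"role": "system", "content": system_prompt},  # re-sent every step
            {"role": "user", "content": current_input},
        ]
        output = fake_model(messages)
        transcript.append(output)
        if output == "DONE":
            break
        current_input = output  # the previous output becomes the next input
    return transcript


if __name__ == "__main__":
    steps = run_agent("Remaining steps: 3", "Work one step at a time. Say DONE when finished.")
    print(steps)
```

The step cap matters in practice: without it, a model that never emits the stop signal would loop forever.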

Understanding and embracing these fundamental realities of the tooling helps you make better decisions when working with this technology.

Wait… This Sounds Familiar…

You're already using system prompts, even if you don't realize it:

ChatGPT's Custom GPTs: Each one is just ChatGPT with different system prompts

Claude's Styles: Preset system prompts for different writing tones

Cursor's rules: Your .cursorrules file is a system prompt for AI coding

AGENT.md: Repository-specific instructions for the AI

Understanding this unlocks massive value. Instead of hoping the AI "gets" what you want, you can explicitly set the stage.

The Bottom Line

System prompts are your hidden control panel. Ignore them at your peril. Some providers publish theirs, and you can often find others through a search engine or in AI enthusiast circles.

Model updates can break your applications. Always test with your specific prompts. OpenAI even provides Evals tools specifically for testing prompt behavior across different models.

Different platforms = different directors. It's not just different models, it's different instructions.

Statelessness is a feature, not a bug. Engineer around it with RAG, context management, and clever prompting.

You're already using this. Every AI tool you touch has system prompts! Now that you’re aware of “The Man Behind the Curtain,” you can use these tools more confidently.