MCP + NLWeb: Talk to the Web (Part 2) by Seth Carney

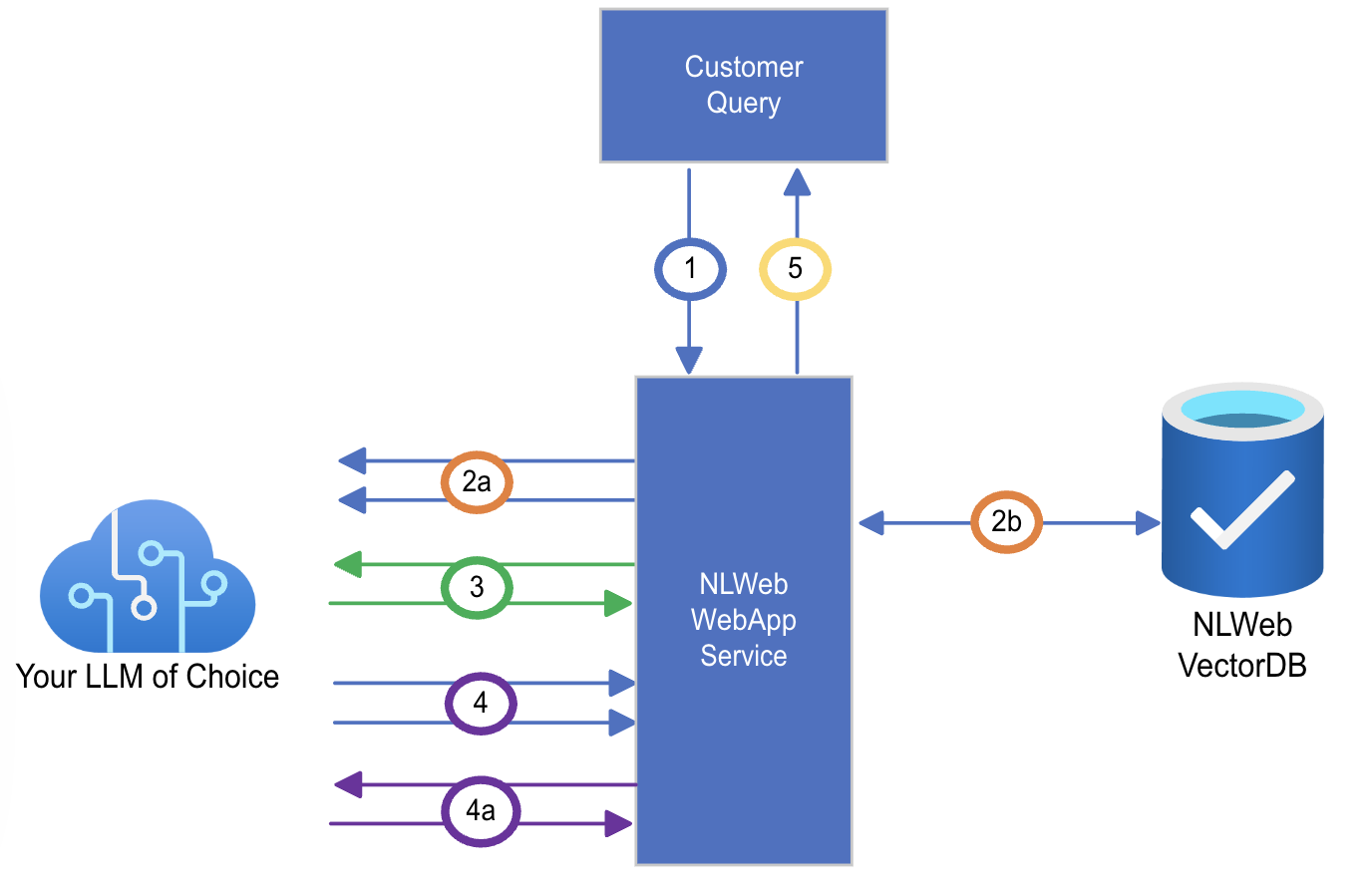

“Life of a Chat Query” diagram by Microsoft, from the NLWeb GitHub repository, licensed under the MIT License.

Microsoft has introduced NLWeb, a bold new standard designed to transform how users interact with websites by enabling natural language interfaces powered by each site's preferred model and data. This initiative could mark a turning point in the agentic ecosystem, bringing us closer to a web where intelligent agents navigate and act on our behalf. Still, adoption remains an open question.

Platforms like Yelp or Google Maps may choose to keep users within their own ecosystems and interfaces. In contrast, companies such as Uber and Expedia may be more interested in the opportunity to let agents handle tasks like booking rides or planning travel.

NLWeb uses web standards such as RSS, Schema.org, and RDF to feed data into a vector database for storage. It provides tools for loading data in these formats, which makes ingestion relatively easy. From there, LLM-powered tools can query that data, allowing both users and AI agents to interact with a site in a natural way.
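To make the ingest-then-query flow concrete, here is a minimal, self-contained Python sketch. It is not NLWeb's code. The JSON-LD sample items, the bag-of-words `embed` function (a toy stand-in for a real embedding model), and the `InMemoryVectorStore` class (a stand-in for a real vector database) are all hypothetical and exist only to show the shape of the pipeline.

```python
import json
import math
import re
from collections import Counter

# Hypothetical sample data: Schema.org items serialized as JSON-LD,
# the kind of structured markup many sites already publish.
SCHEMA_ORG_ITEMS = """
[
  {"@type": "Recipe", "name": "Spicy Lentil Soup",
   "description": "A warming vegetarian soup with red lentils and chili."},
  {"@type": "Recipe", "name": "Lemon Garlic Chicken",
   "description": "Roast chicken with lemon, garlic and thyme."},
  {"@type": "Recipe", "name": "Chocolate Chip Cookies",
   "description": "Classic chewy cookies with dark chocolate chips."}
]
"""


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: stores (vector, item) pairs."""

    def __init__(self) -> None:
        self.rows: list[tuple[Counter, dict]] = []

    def add(self, item: dict) -> None:
        # Index the human-readable fields of the Schema.org item.
        text = f"{item.get('name', '')} {item.get('description', '')}"
        self.rows.append((embed(text), item))

    def search(self, query: str, k: int = 1) -> list[dict]:
        # Rank every stored item by similarity to the query; return the top k.
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [item for _, item in ranked[:k]]


store = InMemoryVectorStore()
for item in json.loads(SCHEMA_ORG_ITEMS):
    store.add(item)

print(store.search("vegetarian lentil soup")[0]["name"])  # prints "Spicy Lentil Soup"
```

In a real deployment, the retrieved items would then be handed to an LLM to compose a natural language answer; that step is left out here to keep the sketch small.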

The data standards mentioned above and NLWeb were all designed by the same person, Ramanathan V. Guha. Apart from these data formats and the code for the core service that handles natural language queries, the rest of the project is built with customization in mind: LLMs, vector databases, and UI frameworks can all be swapped out freely.
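One common way to get this kind of swappability is to keep the query-handling flow fixed and inject the parts that vary behind small interfaces. The Python sketch below illustrates that idea with `typing.Protocol`. It is not how NLWeb itself wires up its providers; the `LLMClient` and `VectorStore` interfaces, the `EchoLLM` and `KeywordStore` stand-ins, and `answer_query` are all hypothetical names chosen for illustration.

```python
from typing import Protocol


class LLMClient(Protocol):
    """Any model backend that can complete a prompt."""

    def complete(self, prompt: str) -> str: ...


class VectorStore(Protocol):
    """Any retrieval backend that returns the k best matches for a query."""

    def search(self, query: str, k: int) -> list[str]: ...


class EchoLLM:
    """Hypothetical stand-in for a hosted model: echoes the last prompt line."""

    def complete(self, prompt: str) -> str:
        return "Answer based on: " + prompt.splitlines()[-1]


class KeywordStore:
    """Hypothetical stand-in for a vector database: substring match, no embeddings."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def search(self, query: str, k: int) -> list[str]:
        words = query.lower().split()
        hits = [d for d in self.docs if any(w in d.lower() for w in words)]
        return hits[:k]


def answer_query(question: str, llm: LLMClient, store: VectorStore, k: int = 3) -> str:
    """Core flow stays fixed; the model and the store are injected and swappable."""
    context = store.search(question, k)
    prompt = f"Question: {question}\nContext: {'; '.join(context)}"
    return llm.complete(prompt)


print(answer_query("lentil soup", EchoLLM(), KeywordStore(["Spicy Lentil Soup", "Lemon Chicken"])))
```

Replacing `EchoLLM` or `KeywordStore` with a different implementation that has the same method signatures requires no change to `answer_query`, which is the property the paragraph above describes.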

It was incredible to see Guha present the repo and give a local demo of its capabilities. He was quick to point out how young the project still is, and he welcomed open source contributions. Like any project in active development, it is bound to have bugs and issues that need to be addressed. Ongoing contributions from Microsoft and others aim to deepen NLWeb’s interoperability with external systems.

What is fascinating about NLWeb applications is that every instance is also inherently an MCP server. This means any NLWeb site can be interacted with directly by agentic AI tools, as long as it is configured to allow it. One of the best analogies I heard to describe the project was:

MCP is to NLWeb what HTTP is to HTML.

Just as HTML defines a webpage’s structure and HTTP handles its delivery over the internet, NLWeb provides a structured framework for users to interact with websites using natural language. MCP is the underlying protocol that carries these interactions, managing communication between the language model and the tools or services it needs. In essence, MCP turns an NLWeb site’s natural language interface into functionality that agents can actually call.
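Under the hood, MCP messages are JSON-RPC 2.0. The Python sketch below builds the two requests an agent would typically send: `tools/list` to discover what a server offers, and `tools/call` to invoke one of those tools. The tool name `ask` and its `query` argument are assumptions for illustration; a real client should read the actual tool names and input schemas from the server's `tools/list` response. The sample response is hand-written, not real NLWeb output, and the transport and initialization handshake are omitted.

```python
import json
from itertools import count
from typing import Optional

# MCP requires that a request id is not reused within a session.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP uses."""
    msg = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def call_tool(name: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request for the named tool."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


def extract_text(response: str) -> str:
    """Join the text parts of a `tools/call` result."""
    result = json.loads(response)["result"]
    return "".join(p["text"] for p in result["content"] if p.get("type") == "text")


# Step 1: ask the server which tools it exposes.
list_req = jsonrpc_request("tools/list")
# Step 2: call a tool. "ask" and "query" are hypothetical names.
ask_req = call_tool("ask", {"query": "vegetarian soups under 30 minutes"})

# Hand-written sample response, not real NLWeb output.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 2,
    "result": {"content": [{"type": "text", "text": "Spicy Lentil Soup (25 min)"}]},
})

print(list_req)
print(ask_req)
print(extract_text(sample_response))
```

Because discovery happens at runtime through `tools/list`, an agent does not need to know a site's capabilities in advance, which is what lets any MCP-enabled NLWeb instance be used by generic agentic tools.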

It will be interesting to see how much traction this standard gains. A number of organizations are already using and contributing to the project, as noted in the official Microsoft announcement. Personally, I think NLWeb is a step in the right direction, but it will be quite some time before it becomes a widely adopted standard across the web. Implementing and supporting a new standard like this at scale is difficult, even with significant resources dedicated to the task.