Ensuring AI Governance: The Role of Human Observability by Mark Hewitt

Made by Mark Hewitt’s AI Collaborator, Zeus

Artificial intelligence is no longer confined to research labs or experimental projects. It has become a core enabler of enterprise transformation, driving efficiencies, improving customer experience, and opening new avenues of growth. Yet with that power comes a growing responsibility. Enterprises are under increasing scrutiny from regulators, customers, and the public to ensure that AI systems operate transparently, fairly, and ethically. At the heart of this challenge lies one critical principle: observability.

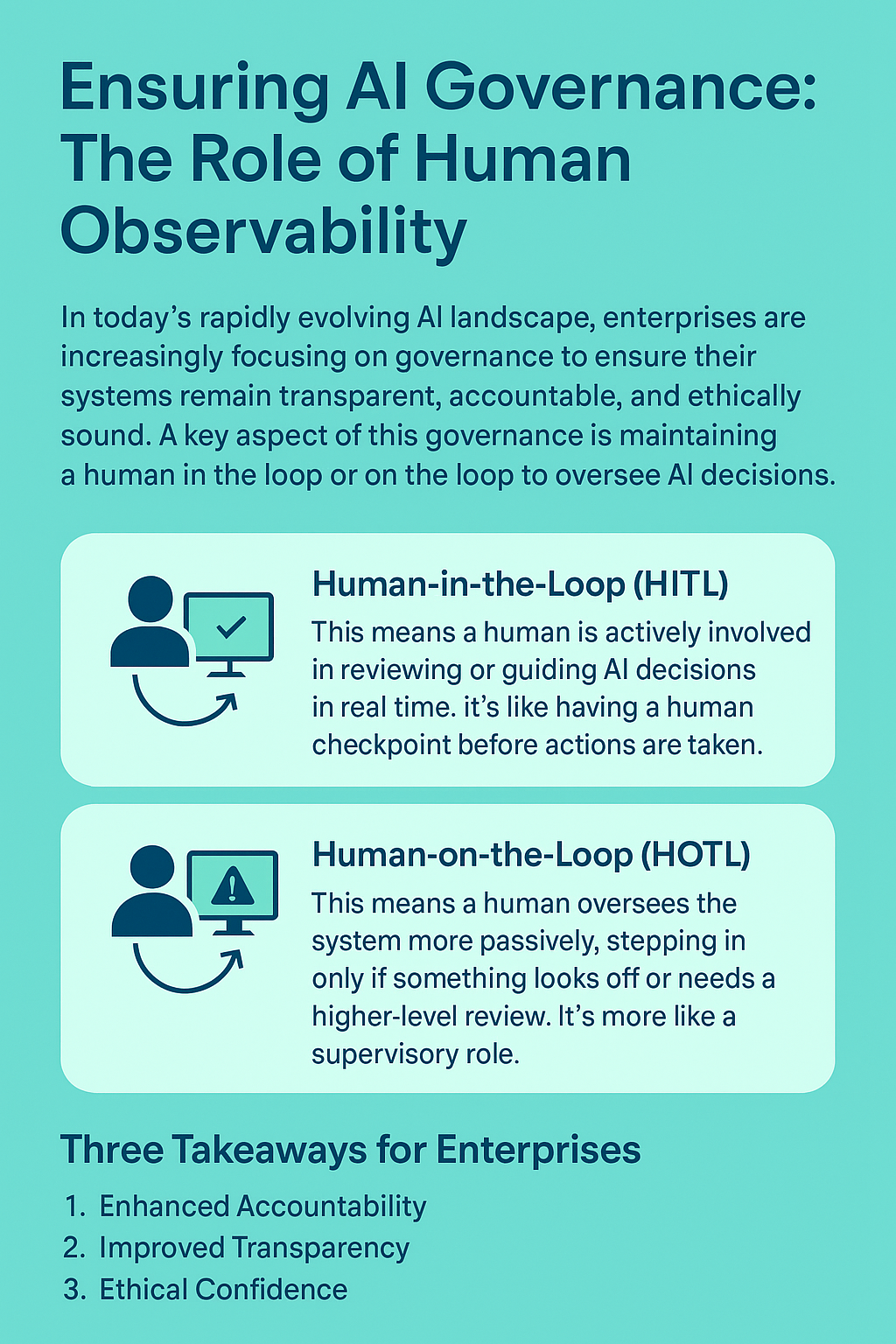

Observability in AI governance means ensuring that humans remain engaged with the systems they build and deploy, whether through direct intervention (human-in-the-loop) or supervisory oversight (human-on-the-loop). These practices keep AI from becoming a “black box” and ensure that automated decisions align with human values, regulatory requirements, and enterprise goals.

Why Observability Matters Now

AI’s growing influence in high-stakes areas such as finance, healthcare, supply chains, and customer engagement makes governance non-negotiable. Without robust oversight, enterprises risk biased decisions, compliance failures, and reputational damage. For instance, automated loan approvals without proper human review may inadvertently discriminate against certain groups, while unchecked algorithms in healthcare could produce unsafe recommendations.

Observability helps address these risks by keeping AI decisions explainable, traceable, and auditable. It gives enterprises the footing to respond confidently to regulatory audits, stakeholder concerns, and customer challenges.

Defining the Loop: HITL vs. HOTL

Human-in-the-Loop (HITL). A model where humans directly review, adjust, or approve AI-driven outcomes in real time. This approach is essential in domains where precision and ethical considerations are paramount, such as medical diagnoses, defense systems, or financial approvals. HITL offers the strongest guardrails but can slow down workflows if applied indiscriminately.

Human-on-the-Loop (HOTL). A more supervisory approach, where humans monitor AI systems from a higher level and step in only when anomalies, risks, or unexpected behaviors occur. HOTL is better suited for large-scale automation where constant intervention would be impractical, such as monitoring network security or managing customer chatbots.

Successful governance often requires a combination of these models, balancing agility with accountability.
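To make that combination concrete, here is a minimal Python sketch of one way an oversight policy might route AI decisions. Everything in it is hypothetical and for illustration only: the `OversightRouter` and `Decision` names, the domain labels, the reviewer callback, and the assumption that a model confidence score below a fixed threshold is a useful anomaly signal. It shows high-stakes decisions held for human approval (HITL), all other decisions taking effect automatically with low-confidence cases flagged for a supervisor (HOTL), and every decision recorded in an audit log so it stays traceable.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass
class Decision:
    decision_id: str
    domain: str          # hypothetical labels, e.g. "loan_approval", "chatbot_reply"
    model_output: str
    confidence: float    # assumed model confidence score in [0, 1]


@dataclass
class AuditEntry:
    decision_id: str
    mode: str            # "HITL" or "HOTL"
    outcome: str
    reviewer: Optional[str]
    timestamp: str


@dataclass
class OversightRouter:
    high_stakes_domains: set[str]
    anomaly_threshold: float = 0.6   # assumption: low confidence is treated as an anomaly
    audit_log: list[AuditEntry] = field(default_factory=list)
    supervisor_alerts: list[str] = field(default_factory=list)

    def route(self, d: Decision,
              human_review: Callable[[Decision], tuple[str, str]]) -> str:
        now = datetime.now(timezone.utc).isoformat()
        if d.domain in self.high_stakes_domains:
            # HITL: a human approves or adjusts the outcome before it takes effect.
            outcome, reviewer = human_review(d)
            self.audit_log.append(AuditEntry(d.decision_id, "HITL", outcome, reviewer, now))
            return outcome
        # HOTL: the AI output takes effect; unusual cases are queued for a supervisor.
        if d.confidence < self.anomaly_threshold:
            self.supervisor_alerts.append(d.decision_id)
        self.audit_log.append(AuditEntry(d.decision_id, "HOTL", d.model_output, None, now))
        return d.model_output


# Usage: a hypothetical reviewer who approves the loan with changes.
def analyst_review(d: Decision) -> tuple[str, str]:
    return ("approved_with_changes", "analyst_1")


router = OversightRouter(high_stakes_domains={"loan_approval"})
first = router.route(Decision("d1", "loan_approval", "approve", 0.95), analyst_review)
second = router.route(Decision("d2", "chatbot_reply", "Here is your balance.", 0.4), analyst_review)

print(first)                                   # approved_with_changes
print([e.mode for e in router.audit_log])      # ['HITL', 'HOTL']
print(router.supervisor_alerts)                # ['d2']
```

Both paths write to the audit log on purpose: in this sketch, HITL and HOTL differ in when a human acts, not in whether a decision is recorded.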

Building Enterprise-Ready Observability

Enterprises that aim to integrate observability into their AI strategy should focus on three key elements:

Technical Infrastructure. Logging, dashboards, explainability tools, and monitoring systems that make AI decisions visible and interpretable.

Organizational Practices. Clear workflows for when and how human oversight is applied, ensuring both speed and accountability.

Cultural Alignment. Training teams to understand AI limitations and empowering them to intervene when necessary.

The goal is not to slow innovation, but to ensure AI operates within trusted, ethical boundaries. Enterprises that invest in these practices build long-term resilience while fostering trust with stakeholders.

The Cost of Neglect

Organizations that ignore observability risk more than regulatory fines. They risk eroding customer trust, damaging their brand, and paying for costly remediation when flawed AI decisions provoke public backlash. As AI adoption accelerates, companies that fail to embed observability may find themselves unable to scale responsibly. Conversely, those that prioritize it can position themselves as leaders in ethical innovation.

Three Takeaways for Enterprises

Enhanced Accountability. By embedding human oversight with the support of EQengineered, organizations can keep AI decisions traceable and auditable, reducing risk while increasing trust.

Improved Transparency. EQengineered can help enterprises implement observability practices that make AI processes clear and understandable across technical and non-technical stakeholders.

Ethical Confidence. With governance frameworks grounded in HITL and HOTL, EQengineered enables organizations to innovate confidently while safeguarding ethical standards.

Observability is not a compliance checkbox. It is the backbone of responsible AI. Enterprises that treat governance as a strategic advantage rather than a constraint will be best positioned to thrive in a world where AI is both powerful and scrutinized. EQengineered partners with organizations to make this vision real, ensuring that governance becomes not a burden but a driver of sustainable innovation.