Are the Increasing Costs of AI Coding Agents Worth It?

By Ed Lyons

Image by Ed Lyons via Midjourney

AI coding tools and agents are radically changing the way software is written, but the newer, much more expensive options are often not worth it. Prudence and the benefits you personally get, not hype or industry benchmarks, should determine which ones you consider.

For more than a year, newer tools have yielded better results, all at a fraction of the cost of doing something manually. But in 2025, the potential cost of using AI coding tools is rising substantially, and faster than improvements in the core models. After a year of always upgrading to the latest and greatest, it is now time to be more careful.

There are now big differences in price among tools. You can use a free coding agent, though there are major limitations. You can use ChatGPT for $20 a month. Cursor is a very popular option that has its own IDE, and also costs $20 a month for individual users.

You can also use a pay-as-you-go model for some services. Google’s Gemini Pro is $1.25 per million tokens, and $2.50 per million tokens for longer queries. Claude Code with Claude Sonnet 4 costs $3 per million input tokens and $15 per million output tokens, while Claude Opus 4 costs $15 and $75 per million input and output tokens, respectively. In my own experience, most of the pay-as-you-go premium options end up averaging $30-$60 per month.
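To make the per-token arithmetic concrete, here is a minimal sketch that prices a single session, reading the two Claude figures as input and output prices per million tokens. The dictionary keys are just labels for this example, not API model identifiers, and the token counts are hypothetical.

```python
# USD per million tokens as (input, output), taken from the figures quoted above.
# The keys are illustrative labels, not official model identifiers.
PRICES_PER_MILLION = {
    "claude-sonnet-4": (3.00, 15.00),
    "claude-opus-4": (15.00, 75.00),
}


def session_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one session at the listed per-million rates."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical session: 400K tokens read by the model, 50K tokens written.
for model in PRICES_PER_MILLION:
    print(f"{model}: ${session_cost(model, 400_000, 50_000):.2f}")
# claude-sonnet-4: $1.95
# claude-opus-4: $9.75
```

Because both rates scale by the same factor, the same session costs exactly five times as much on the more expensive model, whatever the mix of input and output tokens.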

Then there are new, more capable choices like OpenAI’s Codex agent, which costs $200 per month for the pro version. Pricing for Microsoft’s new Copilot Coding Agent, to be released in June, is not clear at this time, but it will be much more expensive than what GitHub Copilot cost in 2024.

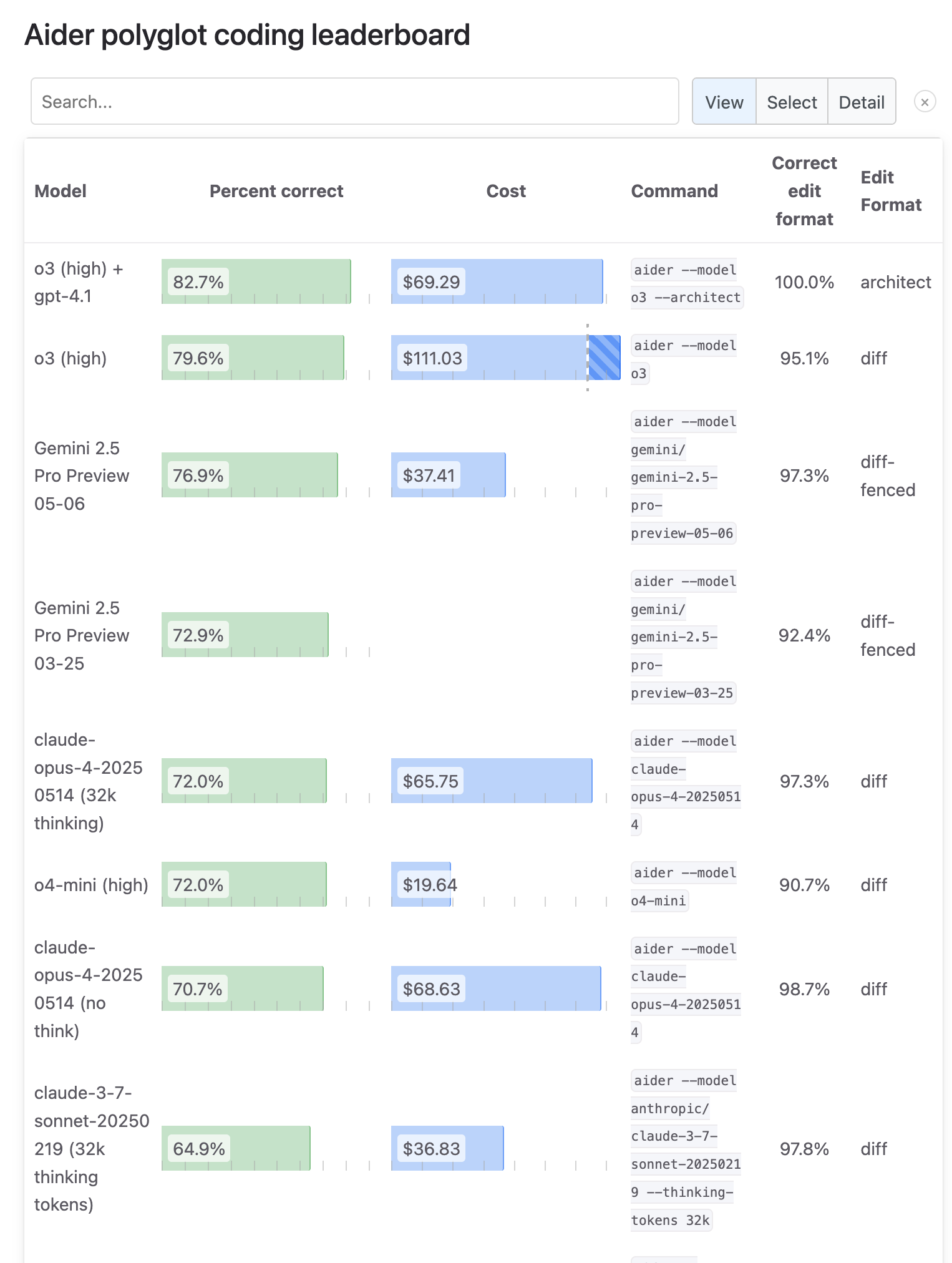

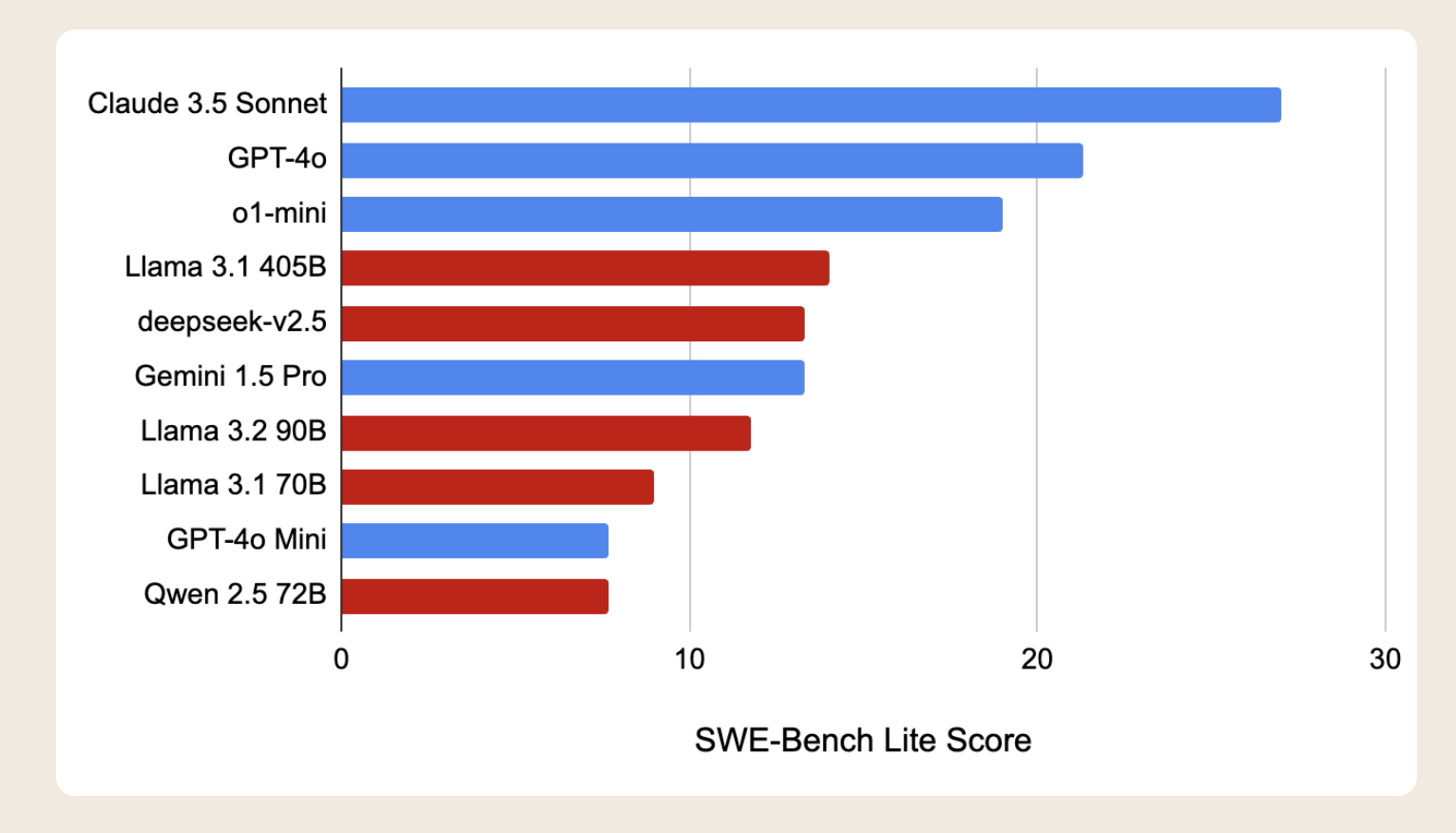

How good are these tools? Does paying more get you more? There are comparisons and leaderboards all over the web. Here are two samples of what you will find:

The top eight contenders in a leaderboard from Aider:

Another from open source agent maker All Hands, with different results:

Many leaderboards produce very different results. To add to the confusion, it is widely known that benchmark accuracy scores often do not align with real-world usage. And not all the tools have the same capabilities.

So how should you choose a tool?

I think of it like choosing a gym. If you pick a gym that you use a lot, the health benefits will be enormous regardless of what kind of facility it is, and it probably does not matter how expensive it is.

If you end up going there rarely, or don’t get a good workout, the money is wasted no matter how cheap it is.

It’s all about what gets you really exercising, not the cost.

As for AI coding tools, we are in a transition period: there are huge variations in how software developers use them, if they use them at all.

Several considerations will drive how much benefit you get. The tools have different interfaces and features. Some developers will want something inside their IDE; others, like me, do not. Some want an agent to make pull requests on its own, while others do not. Personal preferences and the ability to customize features matter.

What matters most is to start using these tools and to learn how to get more out of them. The cost of not using these tools, or of under-using them, will likely be far greater in the long term than the cost of using one now that is more expensive than necessary. However, for the most expensive choices, some evaluation is necessary.

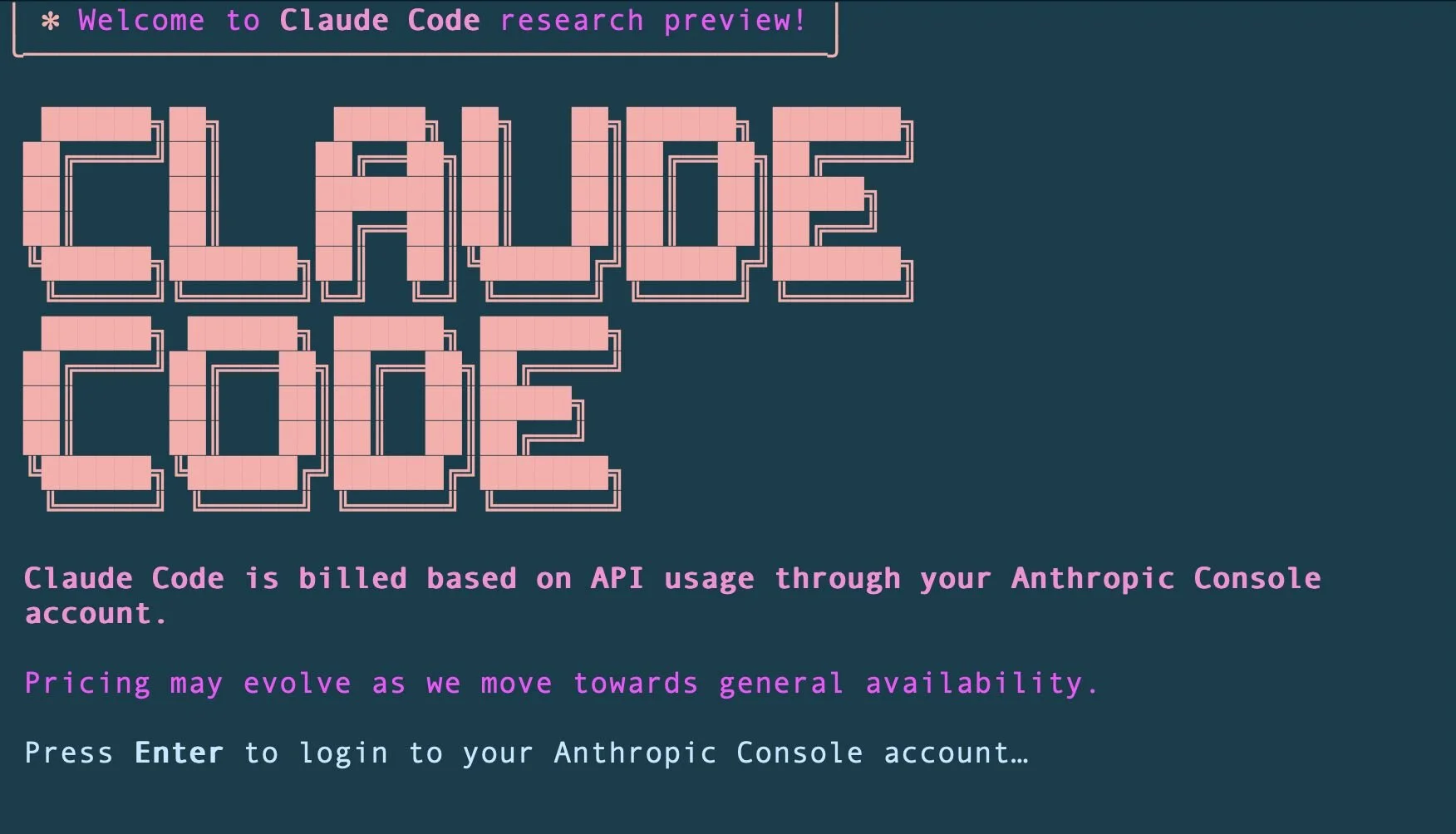

As an example of how to choose a tool and evaluate costs, I will describe my experience with Claude Code and also the recent release of Anthropic’s new premium model, Opus 4.

Last year, I was using AI often, mostly in the form of assistance inside Visual Studio Code using GitHub Copilot. It helped a lot, but didn’t feel like a revolution. I was paying around $10 per month. But late in 2024, significant improvements in AI overall led me to re-evaluate coding tools, especially with the increasing popularity of coding agents, which could execute a lot of small programs to handle all types of coding-related tasks.

Using agents challenged a set of personal workflows I had used successfully for decades. I was not in the mood for any of that.

A friend recommended Claude Code, a command-line tool that lets you describe what you want and then runs off and executes a variety of commands. He said it was smarter than other coding tools, but it cost significantly more. (In general, using it costs me roughly $40 per month.) I used Claude 3.5 Sonnet as the model, which was highly respected, even if not at the top of most leaderboards.

But Claude Code had this campy, old-school interface and feel that made me comfortable. It actually got me using an agent all the time, and I loved using it.

I also tried Cursor, which was very good as well, but it just didn’t feel right for me, even though it was only $20 per month as a flat fee. (Some friends of mine swear by it.)

You might be able to prove that I could get the same business value out of Cursor for less money. But for an extra $20 per month, I was really into agent coding, and that was what mattered. This was the gym I was finally getting to every day, and having a great workout. The leaderboards and benchmarks did not matter.

In speaking with other developers, I get the sense that for most people starting out with agent-based coding AI, $40 per month is the point of diminishing returns. However, for heavy and advanced users of agent-based coding tools, I have heard that $100 per month is average, and $200 is not unheard of.

Lastly, as a case study in weighing rising costs against benefits, I want to address Anthropic’s newer, much more expensive Claude model, Opus 4, which costs five times as much as Claude Sonnet.

Anthropic claims that Opus 4 is better at coding and that it does very well on software engineering benchmarks, especially on long or difficult tasks.

So I picked two different tasks, and compared results and costs of Claude Sonnet versus Claude Opus. (You can toggle between them in Claude Code.)

First, I asked each model to add a set of features to a JavaScript application. These features could have been hand-coded in perhaps four hours, and were easy to understand.

Claude Sonnet took 8 minutes and charged $2.34 to implement a good, working version of what I asked for. Claude Opus 4 took 23 minutes and charged $18.

I manually reviewed all of the code. There was very little difference between the two versions. Opus 4 produced a lot more messages during its analysis, but I saw no additional benefit in the code.

My second exercise was different, and relevant to modernizing an old codebase. I found a workhorse COBOL application that is publicly available on GitHub, with about 50K lines of code. I cloned the repo and removed all of the Markdown documentation files in all of the directories.

I then asked both models the following three questions:

Explain this codebase to me

What are the architectural patterns used?

How easily could this be converted to a Java application?

Claude Sonnet took 4 minutes and charged me $1 for a reasonable set of responses. It described what this insurance application did in several lines, then listed ten kinds of patterns, with examples and where they were used. For the potential migration to Java, it listed the major and minor challenges, what features were in each, and what the key risks were.

Claude Opus 4 took 6 minutes and charged me $5 for a set of responses that were noticeably better. The pattern analysis was more sophisticated, and mentioned which programs were involved with each pattern. Its assessment of the migration to Java was also more detailed: it chose Java-specific frameworks and suggested programming techniques that could be used in translating from COBOL. It gave a phased migration strategy, and made an effort estimate for each major piece of the work. Certainly this was not a blueprint for any real-world modernization, but it did show a decent, detailed understanding of the code.
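Putting the numbers from both exercises side by side helps show where the premium goes. The sketch below only restates the minutes and dollars reported above and computes how many times longer and costlier each Opus 4 run was; the task labels and the `premium` helper are names made up for this illustration.

```python
# (minutes, USD) reported for each run in the two exercises above.
RUNS = {
    "JavaScript features": {"sonnet": (8, 2.34), "opus": (23, 18.00)},
    "COBOL analysis": {"sonnet": (4, 1.00), "opus": (6, 5.00)},
}


def premium(task: str) -> tuple[float, float]:
    """Return (time multiple, cost multiple) of the Opus 4 run over the Sonnet run."""
    sonnet_minutes, sonnet_usd = RUNS[task]["sonnet"]
    opus_minutes, opus_usd = RUNS[task]["opus"]
    return opus_minutes / sonnet_minutes, opus_usd / sonnet_usd


for task in RUNS:
    time_multiple, cost_multiple = premium(task)
    print(f"{task}: {time_multiple:.1f}x the time, {cost_multiple:.1f}x the cost")
# JavaScript features: 2.9x the time, 7.7x the cost
# COBOL analysis: 1.5x the time, 5.0x the cost
```

On the coding task the cost multiple exceeded the five-fold difference in list price, presumably because the longer run also consumed more tokens, while on the analysis task it landed right at five.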

So if I needed to understand an old and poorly-documented codebase, the more costly report would be worth it. But for day-to-day development, Opus 4 is not worth it to me. Perhaps if I wanted to develop a huge set of features or refactor code in a large project, I would pay the premium.

My strategy will be to try out the cheaper option first, get a baseline cost, and then go to a costlier model like Opus 4 if I think it would do better.
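That try-cheap-first approach can be sketched as a small routine. This is only an illustration under stated assumptions: `Result`, `run_with_escalation`, and the stub runners are hypothetical names, not part of Claude Code or any real API, and in practice the "good enough" check is a human judgment rather than a function.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Result:
    model: str
    output: str
    cost_usd: float


def run_with_escalation(
    task: str,
    run_cheap: Callable[[str], Result],
    run_premium: Callable[[str], Result],
    good_enough: Callable[[Result], bool],
) -> tuple[Result, float]:
    """Try the cheaper model first and escalate only if its result falls short.

    Returns the accepted result and the total spend across all attempts.
    """
    baseline = run_cheap(task)
    if good_enough(baseline):
        return baseline, baseline.cost_usd
    upgraded = run_premium(task)
    return upgraded, baseline.cost_usd + upgraded.cost_usd


# Stub runners standing in for real agent sessions (hypothetical outputs and costs).
def cheap(task: str) -> Result:
    return Result("sonnet", "short summary", 1.00)


def costly(task: str) -> Result:
    return Result("opus", "detailed migration plan", 5.00)


result, spent = run_with_escalation(
    "Explain this codebase", cheap, costly,
    good_enough=lambda r: "migration" in r.output,
)
print(result.model, spent)  # opus 6.0
```

The baseline run is never wasted: even when escalation happens, it tells you what the cheaper model would have cost and what it missed.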

In conclusion, I have found there is tremendous value in using agent-based coding tools up to roughly $40-50 per month, as long as you are actively using them. Beyond that point of diminishing returns, you need to be clear about what benefits you are trying to achieve, and whether the tool can accomplish them.